Visual Embedder

Learn about our visual embedder model type

Input: Images and videos

Output: Embeddings

A visual embedder, also known as a visual embedding model, is a deep fine-tuned model designed to generate meaningful numerical representations (embeddings) from images and video frames.

The primary goal of a visual embedder model is to transform the raw pixel values of images or video frames into a dense numerical vector that is far more compact than the raw pixels. These vectors capture essential features and patterns in the visual content, so downstream systems can compare and process the data in a more structured way.

These vectors can then be used for a variety of tasks, such as:

- Visual search: This is the task of finding images or videos that are similar to a given query image or video. The embeddings produced by the model can be compared with a similarity metric, such as cosine similarity, which can then be used to search for similar visual content in a vector database.

- Training on top of them: The visual embedder model can also be used as a starting point for training other machine learning models. For example, a model that can classify images or videos can be trained on top of the visual embedder model.
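To make the visual search use case concrete, here is a minimal sketch of ranking stored embeddings against a query embedding by cosine similarity. It uses plain Python and toy three-dimensional vectors; the item names and the helper functions `cosine_similarity` and `rank_by_similarity` are hypothetical and are not part of the Clarifai SDK. A production setup would typically delegate this step to a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, index):
    """Return (item_id, score) pairs sorted from most to least similar."""
    scores = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy embeddings (hypothetical values); real embeddings have many more dimensions
index = {
    "burger_a": [0.9, 0.1, 0.0],
    "ramen_a": [0.1, 0.9, 0.1],
    "burger_b": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

ranking = rank_by_similarity(query, index)
for item_id, score in ranking:
    print(item_id, round(score, 3))
```

The same ranked list could also serve as input features for a second model, which is the idea behind training on top of embeddings.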

The visual embedder model type also comes with various templates that give you the control to choose the specific architecture used by your neural network, as well as define a set of hyperparameters you can use to fine-tune the way your model learns.

You may choose a visual embedder model type in cases where:

- You need a model that can accurately represent images and video frames as vectors. Once the model is trained, you can use it to embed new images or videos into vectors.

- You need an embedding model to learn new features not recognized by the existing Clarifai models. In that case, you may need to "deep fine-tune" your custom model and integrate it directly within your workflows.

- You have a custom-tailored dataset, accurate labels, and the expertise and time to fine-tune models.

Create and Train a Visual Embedder

Let's demonstrate how to create and train a visual embedder model using our API.

Before using the Python SDK, Node.js SDK, or any of our gRPC clients, ensure they are properly installed on your machine. Refer to their respective installation guides for instructions on how to install and initialize them.

Step 1: App Creation

Let's start by creating an app.

- Python SDK

from clarifai.client.user import User

# Replace "user_id" with your own user ID

client = User(user_id="user_id")

app = client.create_app(app_id="demo_train", base_workflow="Universal")

Step 2: Dataset Upload

Next, let’s upload the dataset that will be used to train the model to the app.

You can find the dataset we used here.

- Python SDK

# Import os for path handling, and load_module_dataloader to load the dataloader object defined in dataset.py in the local data folder

import os

from clarifai.datasets.upload.utils import load_module_dataloader

# Construct the path to the dataset folder

module_path = os.path.join(os.getcwd().split('/models/model_train')[0],'datasets/upload/image_classification/food-101')

# Load the dataloader module using the provided function from your module

food101_dataloader = load_module_dataloader(module_path)

# Create a Clarifai dataset with the specified dataset_id

dataset = app.create_dataset(dataset_id="image_dataset")

# Upload the dataset using the provided dataloader and get the upload status

dataset.upload_dataset(dataloader=food101_dataloader,get_upload_status=True)

Step 3: Model Creation

Let's list all the trainable model types available on the Clarifai platform.

- Python SDK

print(app.list_trainable_model_types())

Output

['visual-classifier',

'visual-detector',

'visual-segmenter',

'visual-embedder',

'clusterer',

'text-classifier',

'embedding-classifier',

'text-to-text']

Next, let's select the visual-embedder model type and use it to create a model.

- Python SDK

MODEL_ID = "model_visual_embedder"

MODEL_TYPE_ID = "visual-embedder"

# Create a model by passing the model ID and the model type ID as parameters

model = app.create_model(model_id=MODEL_ID, model_type_id=MODEL_TYPE_ID)

Step 4: Template Selection

Let's list all the training templates available for this model type.

- Python SDK

print(model.list_training_templates())

Output

['classification_angular_margin_embed',

'classification_basemodel_v1_embed',

'Clarifai_ResNet_AngularMargin',

'Clarifai_InceptionBatchNorm',

'Clarifai_ResNext']

Next, let's choose the 'Clarifai_ResNext' template to use for training our model, as demonstrated below.

Step 5: Set Up Model Parameters

You can customize the model parameters as needed before starting the training process.

- Python SDK

# Get the params for the selected template

model_params = model.get_params(template='Clarifai_ResNext')

# Collect the IDs of the concepts in the app to include in the params

concepts = [concept.id for concept in app.list_concepts()]

model.update_params(dataset_id='image_dataset', concepts=concepts)

print(model.training_params)

Output

{'dataset_id': 'image_dataset',

'dataset_version_id': '',

'concepts': ['id-hamburger', 'id-ramen', 'id-prime_rib', 'id-beignets'],

'train_params': {'invalid_data_tolerance_percent': 5.0,

'template': 'Clarifai_ResNext',

'logreg': 1.0,

'image_size': 256.0,

'batch_size': 64.0,

'init_epochs': 25.0,

'step_epochs': 7.0,

'num_epochs': 65.0,

'per_item_lrate': 7.8125e-05,

'num_items_per_epoch': 0.0}}

Step 6: Initiate Model Training

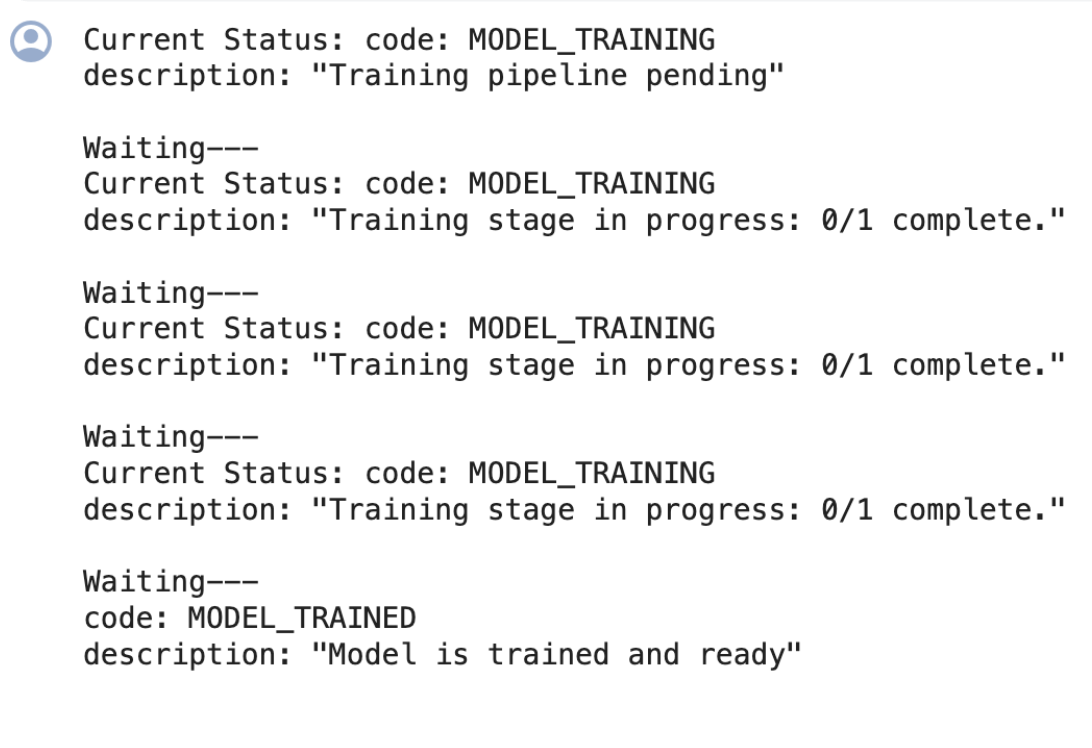

To initiate the model training process, call the model.train() method. The Clarifai API also provides features for monitoring training status and saving training logs to a local file.

If the status code is MODEL_TRAINED (21100), the model has been trained successfully and is ready for use. A status code of MODEL_TRAINING_FAILED (21106) indicates that training failed.

- Python SDK

import time

# Start the training

model_version_id = model.train()

# Check the training status every two minutes until it succeeds or fails

while True:

    status = model.training_status(version_id=model_version_id, training_logs=False)

    if status.code == 21106:  # MODEL_TRAINING_FAILED

        print(status)

        break

    elif status.code == 21100:  # MODEL_TRAINED

        print(status)

        break

    else:

        print("Current Status:", status)

        print("Waiting---")

        time.sleep(120)
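The loop above polls indefinitely. As a hedged variation, the sketch below wraps the same logic in a helper that gives up after a timeout. The helper name `wait_for_training`, its parameters, and the `PENDING` placeholder code are hypothetical; only the two status codes 21100 and 21106 come from the loop above. The demo uses a fake status source so it runs without the SDK.

```python
import time

MODEL_TRAINED = 21100          # success code used in the loop above
MODEL_TRAINING_FAILED = 21106  # failure code used in the loop above
PENDING = -1                   # placeholder for "any other code", not a real status code


def wait_for_training(get_status_code, poll_seconds=120, timeout_seconds=6 * 3600):
    """Poll until training succeeds, fails, or the timeout expires.

    get_status_code is a zero-argument callable returning the current
    integer status code. Returns True on success and False on failure;
    raises TimeoutError if neither happens before the deadline.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        code = get_status_code()
        if code == MODEL_TRAINED:
            return True
        if code == MODEL_TRAINING_FAILED:
            return False
        if time.monotonic() >= deadline:
            raise TimeoutError("training did not finish before the timeout")
        time.sleep(poll_seconds)


# Demo with a fake status source: two pending polls, then success
fake_codes = iter([PENDING, PENDING, MODEL_TRAINED])
result = wait_for_training(lambda: next(fake_codes), poll_seconds=0)
print("Trained:", result)
```

With the objects from this step, the callable could be written as `lambda: model.training_status(version_id=model_version_id, training_logs=False).code`, which reuses the same call as the loop above.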

Step 7: Model Prediction

After the model is trained and ready to use, you can run some predictions with it.

- Python SDK

import os

IMAGE_PATH = os.path.join(os.getcwd().split('/models')[0],'datasets/upload/image_classification/food-101/images/hamburger/139558.jpg')

model_prediction = model.predict_by_filepath(IMAGE_PATH, input_type="image")

# Get the output

print(model_prediction.outputs[0].data.embeddings)

Output

embeddings {

vector: 0.021010370925068855

vector: 0.011909130029380322

vector: 2.2577569325221702e-07

vector: 0.001307532424107194

vector: 0.04247743636369705

vector: 0.01022490207105875

vector: 0.0006444881437346339

vector: 0.027988344430923462

vector: 0.028407510370016098

vector: 5.129506917000981e-06

vector: 0.03279731422662735

vector: 0.016899824142456055

vector: 0.003125722287222743

vector: 0.0

vector: 0.024156155064702034

vector: 0.04975743591785431

vector: 0.010608416981995106

vector: 0.0006941271130926907

vector: 0.00018513976829126477

vector: 2.714529364311602e-05

vector: 0.0014789806446060538

……..

}
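Before storing or comparing embeddings like the one above, it is common to scale each vector to unit length, because the dot product of two unit vectors equals their cosine similarity. The sketch below shows that step on the first four values of the output above. The helper `to_unit_vector` is hypothetical, and reading the values from the response as `model_prediction.outputs[0].data.embeddings[0].vector` is an assumption about the response layout that you should verify against your SDK version.

```python
import math


def to_unit_vector(values):
    """Copy an iterable of floats into a list scaled to unit L2 norm."""
    vec = [float(v) for v in values]
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0.0:
        return vec  # a zero vector cannot be normalized; return it unchanged
    return [v / norm for v in vec]


# First four values from the printed output above (the full vector is longer).
# With the SDK, the input is assumed to be something like:
#   model_prediction.outputs[0].data.embeddings[0].vector
raw = [
    0.021010370925068855,
    0.011909130029380322,
    2.2577569325221702e-07,
    0.001307532424107194,
]

unit = to_unit_vector(raw)
print("dimensions:", len(unit))
print("L2 norm:", math.sqrt(sum(v * v for v in unit)))
```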