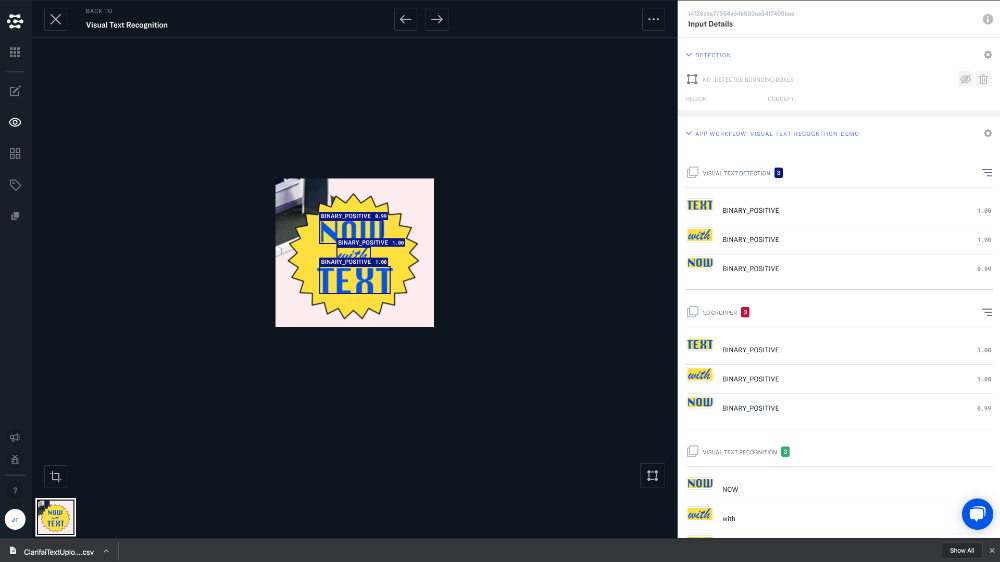

Visual Text Recognition

Work with text in images, just like you work with encoded text

Visual text recognition (VTR) helps you convert printed text in images and videos into machine-encoded text. You can input a scanned document, a photo of a document, a scene-photo (such as the text on signs and billboards), or text superimposed on an image (such as in a meme), and output the words and individual characters present in the images.

VTR lets you "digitize" text so that it can be edited, searched, stored, displayed, and analyzed.

The current version of our VTR model is not designed for use with handwritten text or documents with tightly-packed text—like you might see on the page of a novel, for example.

How VTR Works

VTR works by first detecting the location of text in your photos or video frames, then cropping the region where the text is present, and then finally running a specialized classification model that will extract text from the cropped image. To accomplish these different tasks, you will need to configure a workflow.

You will then add these three models to your workflow:

- Visual Text Detection

- 1.0 Cropper

- Visual Text Recognition

The initialization code used in the following example is outlined in detail on the client installation page.

Building a VTR Workflow

- gRPC Python

- gRPC NodeJS

- gRPC Java

- cURL

###################################################################################

# In this section, we set the user authentication, app ID, and the details of the

# VTR Workflow we want to build. Change these strings to run your own example.

##################################################################################

USER_ID = 'YOUR_USER_ID_HERE'

# Your PAT (Personal Access Token) can be found in the Account's Security section

PAT = 'YOUR_PAT_HERE'

APP_ID = 'YOUR_APP_ID_HERE'

# Change these to build your own VTR Workflow

WORKFLOW_ID = 'visual-text-recognition-id'

WORKFLOWNODE_ID_1 = 'detect-concept'

MODEL_ID_1 = '2419e2eae04d04f820e5cf3aba42d0c7'

MODEL_VERSION_ID_1 = '75a5b92a0dec436a891b5ad224ac9170'

WORKFLOWNODE_ID_2 = 'image-crop'

MODEL_ID_2 = 'ce3f5832af7a4e56ae310d696cbbefd8'

MODEL_VERSION_ID_2 = 'a78efb13f7774433aa2fd4864f41f0e6'

WORKFLOWNODE_ID_3 = 'image-to-text'

MODEL_ID_3 = '9fe78b4150a52794f86f237770141b33'

MODEL_VERSION_ID_3 = 'd94413e582f341f68884cac72dbd2c7b'

##########################################################################

# YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE

##########################################################################

from clarifai_grpc.channel.clarifai_channel import ClarifaiChannel

from clarifai_grpc.grpc.api import resources_pb2, service_pb2, service_pb2_grpc

from clarifai_grpc.grpc.api.status import status_code_pb2

channel = ClarifaiChannel.get_grpc_channel()

stub = service_pb2_grpc.V2Stub(channel)

metadata = (('authorization', 'Key ' + PAT),)

userDataObject = resources_pb2.UserAppIDSet(user_id=USER_ID, app_id=APP_ID) # The userDataObject is required when using a PAT

post_workflows_response = stub.PostWorkflows(

service_pb2.PostWorkflowsRequest(

user_app_id=userDataObject,

workflows=[

resources_pb2.Workflow(

id=WORKFLOW_ID,

nodes=[

resources_pb2.WorkflowNode(

id=WORKFLOWNODE_ID_1,

model=resources_pb2.Model(

id=MODEL_ID_1,

model_version=resources_pb2.ModelVersion(

id=MODEL_VERSION_ID_1

)

)

),

resources_pb2.WorkflowNode(

id=WORKFLOWNODE_ID_2,

model=resources_pb2.Model(

id=MODEL_ID_2,

model_version=resources_pb2.ModelVersion(

id=MODEL_VERSION_ID_2

)

),

node_inputs=[

resources_pb2.NodeInput(node_id=WORKFLOWNODE_ID_1)

]

),

resources_pb2.WorkflowNode(

id=WORKFLOWNODE_ID_3,

model=resources_pb2.Model(

id=MODEL_ID_3,

model_version=resources_pb2.ModelVersion(

id=MODEL_VERSION_ID_3

)

),

node_inputs=[

resources_pb2.NodeInput(node_id=WORKFLOWNODE_ID_2)

]

),

]

)

]

),

metadata=metadata

)

if post_workflows_response.status.code != status_code_pb2.SUCCESS:

raise Exception("Post workflows failed, status: " + post_workflows_response.status.description)

//index.js file

/////////////////////////////////////////////////////////////////////////////////////

// In this section, we set the user authentication, app ID, and the details of the

// VTR Workflow we want to build. Change these strings to run your own example.

/////////////////////////////////////////////////////////////////////////////////////

const USER_ID = 'YOUR_USER_ID_HERE';

// Your PAT (Personal Access Token) can be found in the Account's Security section

const PAT = 'YOUR_PAT_HERE';

const APP_ID = 'YOUR_APP_ID_HERE';

// Change these to build your own VTR Workflow

const WORKFLOW_ID = 'visual-text-recognition-id';

const WORKFLOWNODE_ID_1 = 'detect-concept';

const MODEL_ID_1 = '2419e2eae04d04f820e5cf3aba42d0c7';

const MODEL_VERSION_ID_1 = '75a5b92a0dec436a891b5ad224ac9170';

const WORKFLOWNODE_ID_2 = 'image-crop';

const MODEL_ID_2 = 'ce3f5832af7a4e56ae310d696cbbefd8';

const MODEL_VERSION_ID_2 = 'a78efb13f7774433aa2fd4864f41f0e6';

const WORKFLOWNODE_ID_3 = 'image-to-text';

const MODEL_ID_3 = '9fe78b4150a52794f86f237770141b33';

const MODEL_VERSION_ID_3 = 'd94413e582f341f68884cac72dbd2c7b';

/////////////////////////////////////////////////////////////////////////////

// YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE

/////////////////////////////////////////////////////////////////////////////

const { ClarifaiStub, grpc } = require("clarifai-nodejs-grpc");

const stub = ClarifaiStub.grpc();

// This will be used by every Clarifai endpoint call

const metadata = new grpc.Metadata();

metadata.set("authorization", "Key " + PAT);

stub.PostWorkflows(

{

user_app_id: {

user_id: USER_ID,

app_id: APP_ID

},

workflows: [

{

id: WORKFLOW_ID,

nodes: [

{

id: WORKFLOWNODE_ID_1,

model: {

id: MODEL_ID_1,

model_version: {

id: MODEL_VERSION_ID_1

}

}

},

{

id: WORKFLOWNODE_ID_2,

model: {

id: MODEL_ID_2,

model_version: {

id: MODEL_VERSION_ID_2

}

},

node_inputs: [

{

node_id: WORKFLOWNODE_ID_1

}

]

},

{

id: WORKFLOWNODE_ID_3,

model: {

id: MODEL_ID_3,

model_version: {

id: MODEL_VERSION_ID_3

}

},

node_inputs: [

{

node_id: WORKFLOWNODE_ID_2

}

]

}

]

}

]

},

metadata,

(err, response) => {

if (err) {

throw new Error(err);

}

if (response.status.code !== 10000) {

console.log(response.status);

throw new Error("Post workflows failed, status: " + response.status.description);

}

}

);

package com.clarifai.example;

import com.clarifai.channel.ClarifaiChannel;

import com.clarifai.credentials.ClarifaiCallCredentials;

import com.clarifai.grpc.api.*;

import com.clarifai.grpc.api.status.StatusCode;

public class ClarifaiExample {

/////////////////////////////////////////////////////////////////////////////////////

// In this section, we set the user authentication, app ID, and the details of the

// VTR Workflow we want to build. Change these strings to run your own example.

/////////////////////////////////////////////////////////////////////////////////////

static final String USER_ID = "YOUR_USER_ID_HERE";

//Your PAT (Personal Access Token) can be found in the portal under Authentication

static final String PAT = "YOUR_PAT_HERE";

static final String APP_ID = "YOUR_APP_ID_HERE";

// Change these to build your own VTR Workflow

static final String WORKFLOW_ID = "visual-text-recognition-id";

static final String WORKFLOWNODE_ID_1 = "detect-concept";

static final String MODEL_ID_1 = "2419e2eae04d04f820e5cf3aba42d0c7";

static final String MODEL_VERSION_ID_1 = "75a5b92a0dec436a891b5ad224ac9170";

static final String WORKFLOWNODE_ID_2 = "image-crop";

static final String MODEL_ID_2 = "ce3f5832af7a4e56ae310d696cbbefd8";

static final String MODEL_VERSION_ID_2 = "a78efb13f7774433aa2fd4864f41f0e6";

static final String WORKFLOWNODE_ID_3 = "image-to-text";

static final String MODEL_ID_3 = "9fe78b4150a52794f86f237770141b33";

static final String MODEL_VERSION_ID_3 = "d94413e582f341f68884cac72dbd2c7b";

///////////////////////////////////////////////////////////////////////////////////

// YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE

///////////////////////////////////////////////////////////////////////////////////

public static void main(String[] args) {

V2Grpc.V2BlockingStub stub = V2Grpc.newBlockingStub(ClarifaiChannel.INSTANCE.getGrpcChannel())

.withCallCredentials(new ClarifaiCallCredentials(PAT));

MultiWorkflowResponse postWorkflowsResponse = stub.postWorkflows(

PostWorkflowsRequest.newBuilder()

.setUserAppId(UserAppIDSet.newBuilder().setUserId(USER_ID).setAppId(APP_ID))

.addWorkflows(

Workflow.newBuilder()

.setId(WORKFLOW_ID)

.addNodes(

WorkflowNode.newBuilder()

.setId(WORKFLOWNODE_ID_1)

.setModel(

Model.newBuilder()

.setId(MODEL_ID_1)

.setModelVersion(

ModelVersion.newBuilder()

.setId(MODEL_VERSION_ID_1)

)

)

)

.addNodes(

WorkflowNode.newBuilder()

.setId(WORKFLOWNODE_ID_2)

.setModel(

Model.newBuilder()

.setId(MODEL_ID_2)

.setModelVersion(

ModelVersion.newBuilder()

.setId(MODEL_VERSION_ID_2)

)

)

.addNodeInputs(NodeInput.newBuilder().setNodeId(WORKFLOWNODE_ID_1))

)

.addNodes(

WorkflowNode.newBuilder()

.setId(WORKFLOWNODE_ID_3)

.setModel(

Model.newBuilder()

.setId(MODEL_ID_3)

.setModelVersion(

ModelVersion.newBuilder()

.setId(MODEL_VERSION_ID_3)

)

)

.addNodeInputs(NodeInput.newBuilder().setNodeId(WORKFLOWNODE_ID_2))

)

)

.build()

);

if (postWorkflowsResponse.getStatus().getCode() != StatusCode.SUCCESS) {

throw new RuntimeException("Post workflows failed, status: " + postWorkflowsResponse.getStatus());

}

}

}

curl -X POST "https://api.clarifai.com/v2/users/YOUR_USER_ID_HERE/apps/YOUR_APP_ID_HERE/workflows" \

-H "Authorization: Key YOUR_PAT_HERE" \

-H "Content-Type: application/json" \

--data-raw '{

"workflows": [

{

"id": "visual-text-recognition-id",

"nodes": [

{

"id": "detect-concept",

"model": {

"id": "2419e2eae04d04f820e5cf3aba42d0c7",

"model_version": {

"id": "75a5b92a0dec436a891b5ad224ac9170"

}

}

},

{

"id": "image-crop",

"model": {

"id": "ce3f5832af7a4e56ae310d696cbbefd8",

"model_version": {

"id": "a78efb13f7774433aa2fd4864f41f0e6"

}

},

"node_inputs": [

{

"node_id": "general-concept"

}

]

},

{

"id": "image-to-text",

"model": {

"id": "9fe78b4150a52794f86f237770141b33",

"model_version": {

"id": "d94413e582f341f68884cac72dbd2c7b"

}

},

"node_inputs": [

{

"node_id": "image-crop"

}

]

}

]

}

]

}'