Deploy a Model

Deploy a model into your created cluster and nodepool

Clarifai’s Compute Orchestration lets you deploy any model on any compute infrastructure, at any scale.

You can configure your compute environment and deploy your models into nodepools with your preferred settings, optimizing for both cost and scalability.

With model deployment, you can quickly take a trained model and set it up for inference.

Learn how deployment works when making a prediction using our Compute Orchestration capabilities here.

Via the UI

Each model or workflow can only have one deployment per nodepool.

Step 1: Start Creating a Deployment

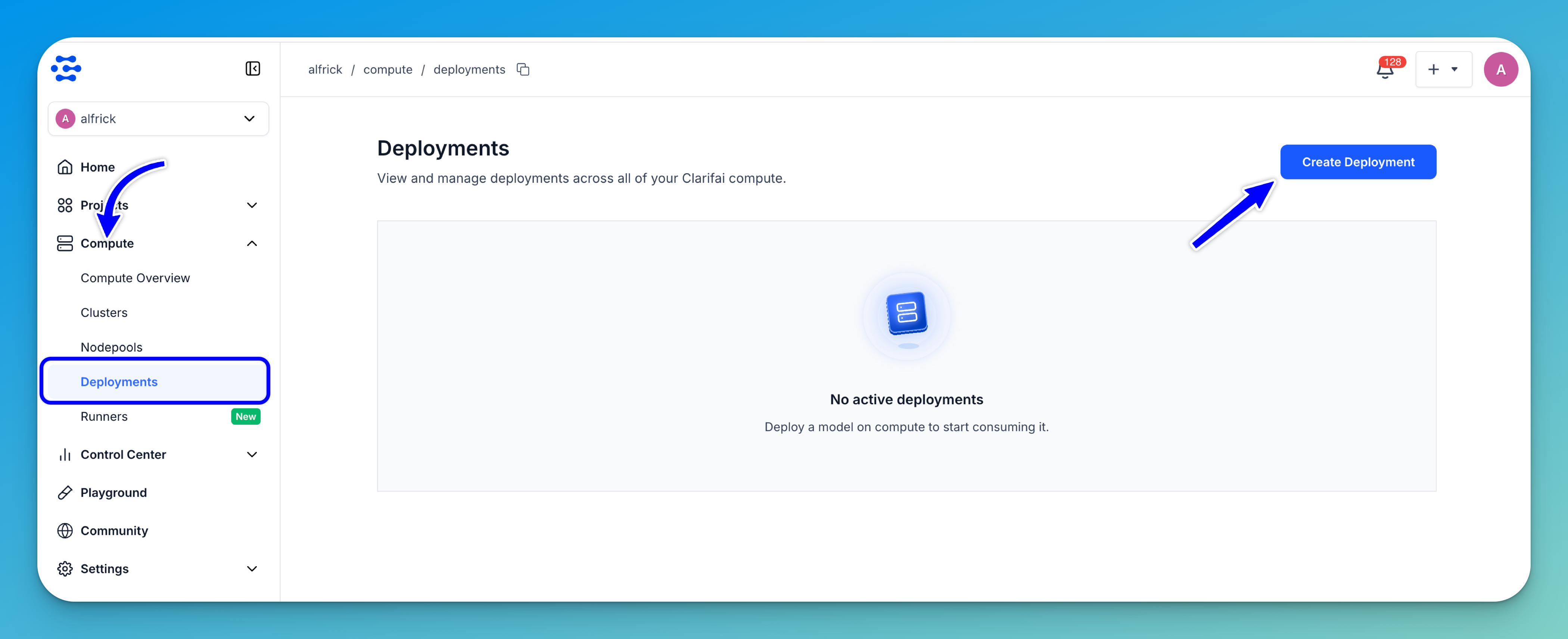

To create a deployment, go to the Compute option in the collapsible left sidebar. Then, select Deployments from the dropdown list.

You'll be redirected to the Deployments page, where you can view and manage deployments across your Clarifai compute resources.

To start creating a deployment, click the Create Deployment button in the upper-right corner of the page.

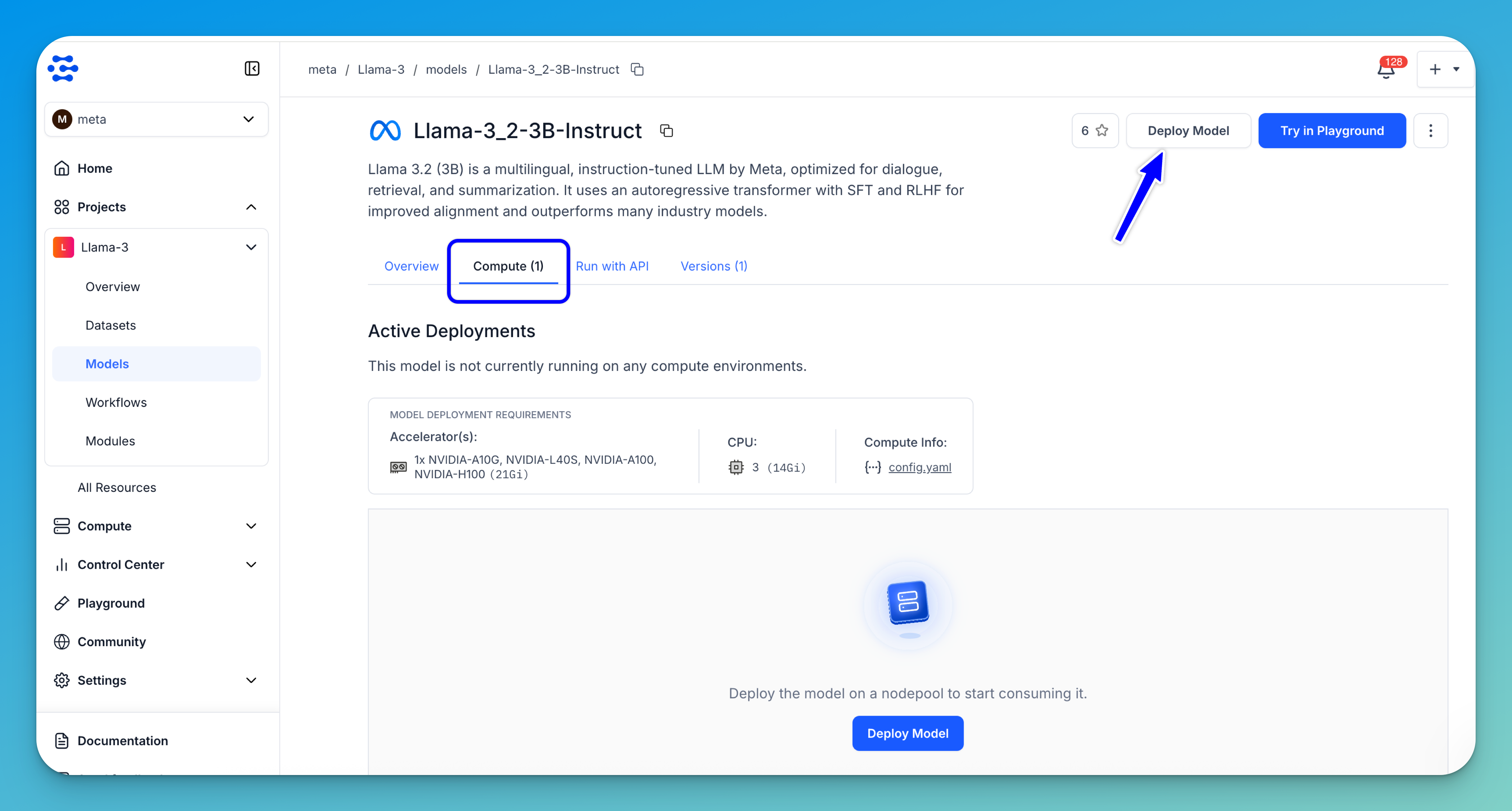

You can also create a deployment directly from other areas of the platform. To deploy a specific model, go to that model's page and click the Deploy Model button. You can also create a deployment from a particular cluster or nodepool by navigating to its page and clicking Deploy Model there.

Note: On an individual model page, you can also open the Compute tab to check if it is already running on any compute environments. This tab displays the compute requirements needed for successfully deploying the model, allowing you to choose a configuration that meets those requirements.

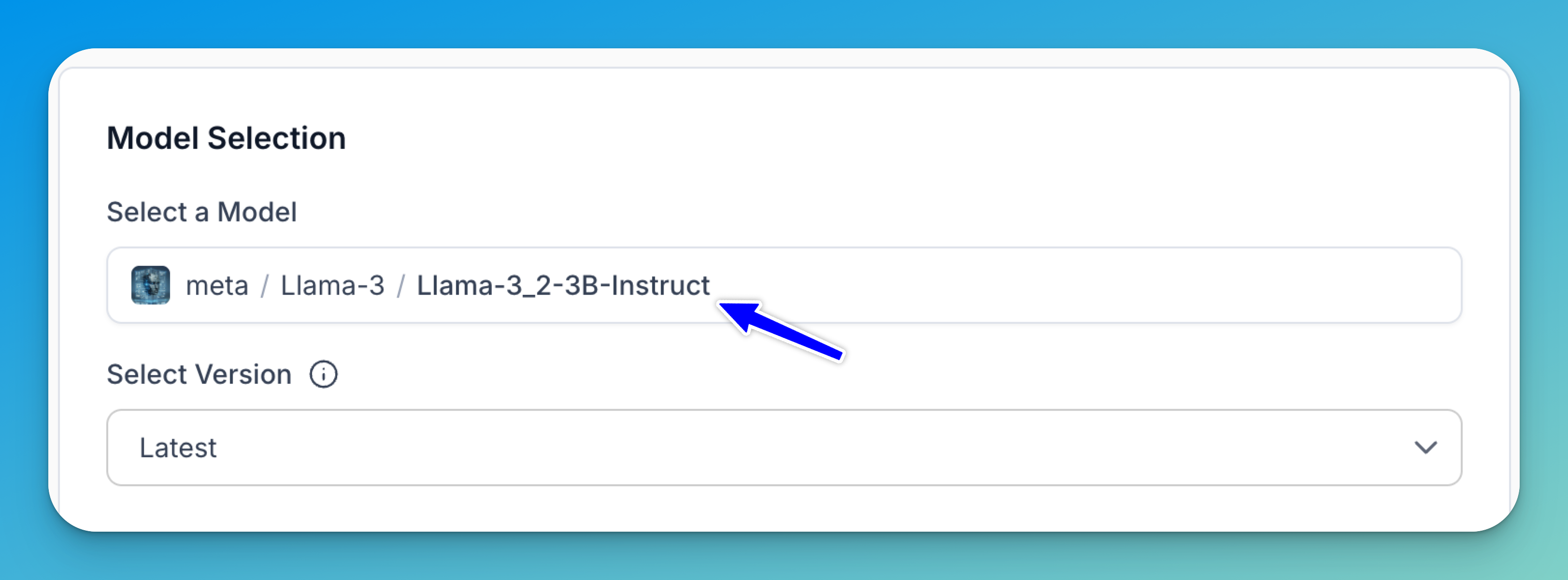

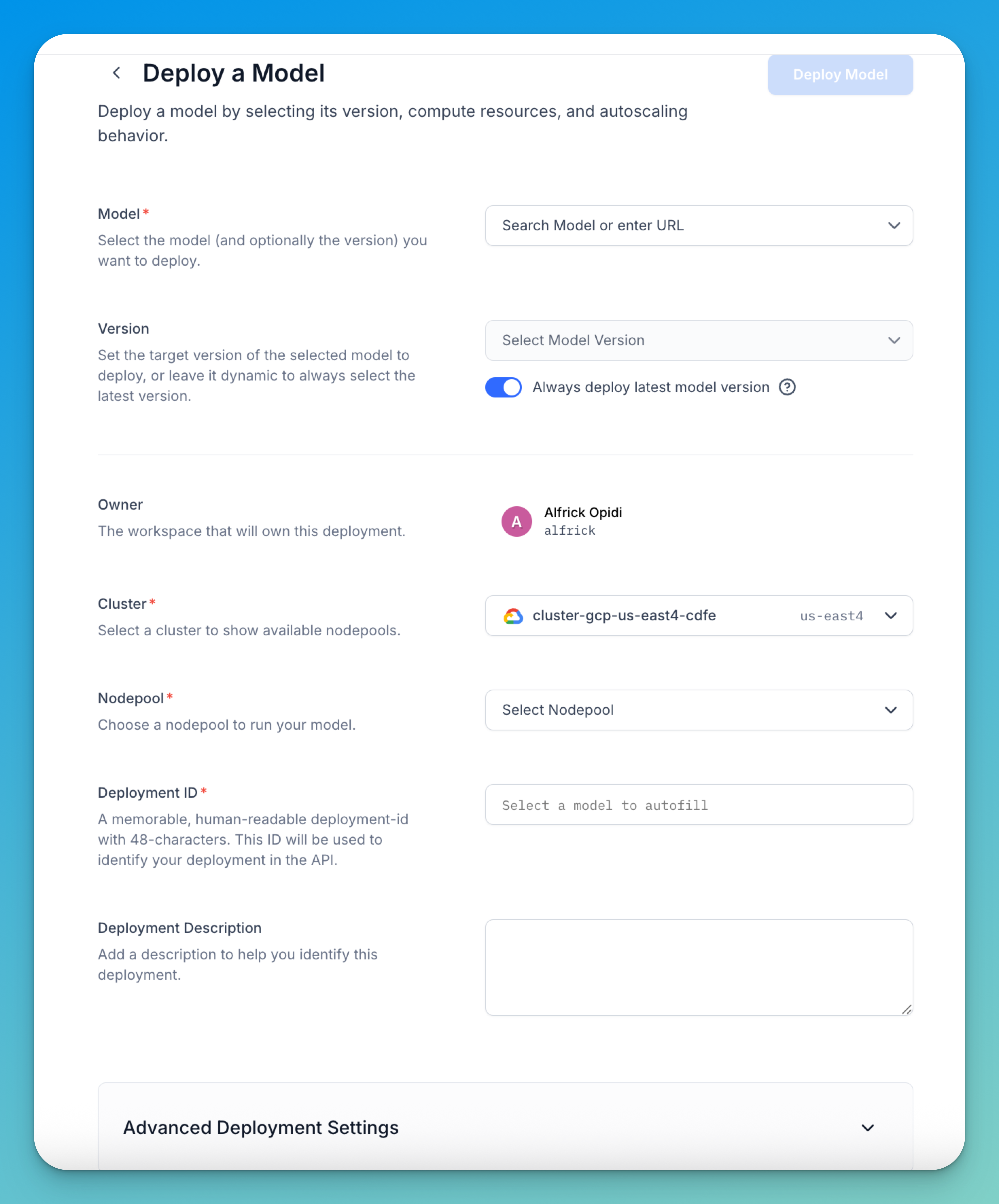

Step 2: Select a Model

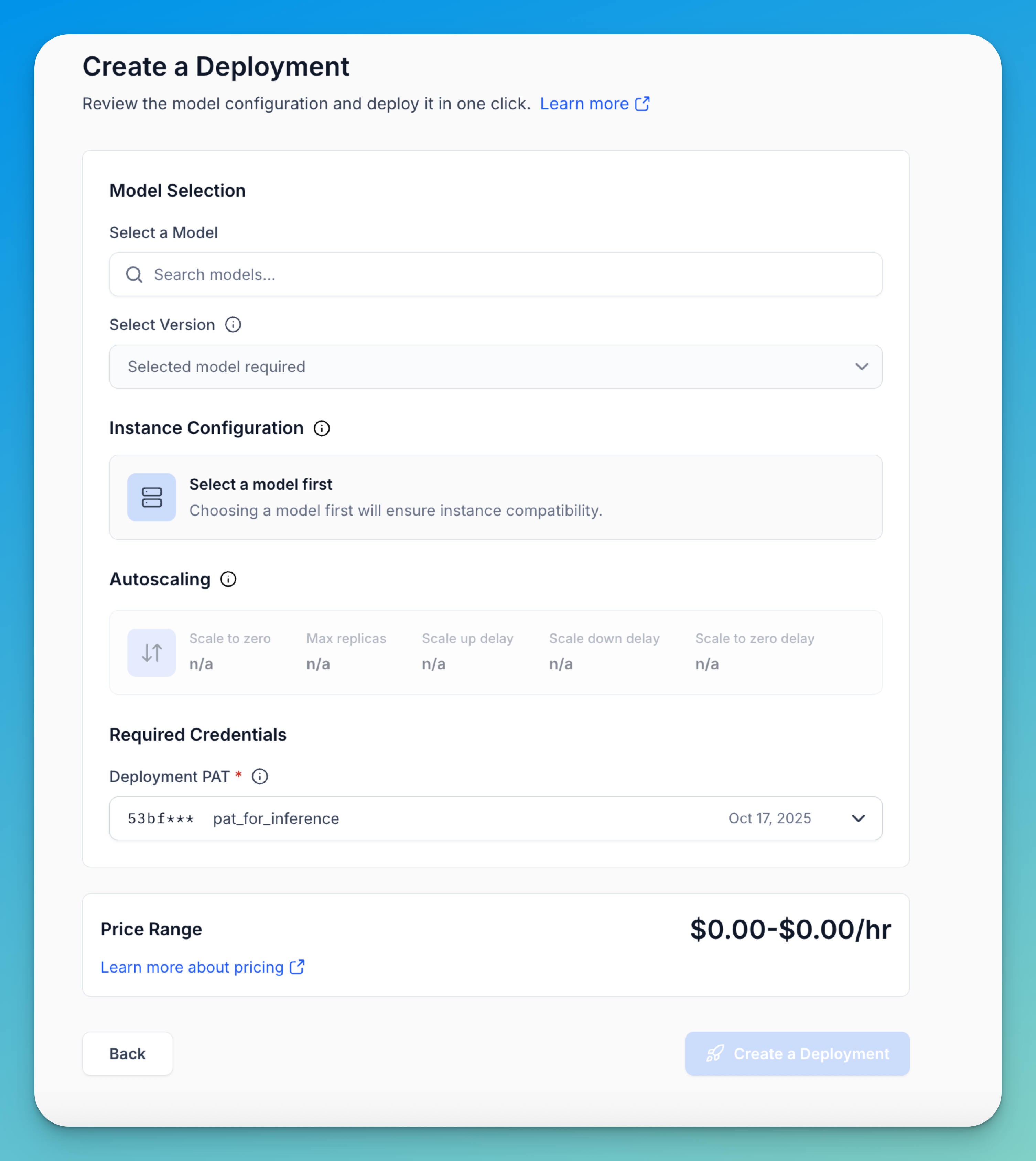

You’ll be redirected to a page where you can configure your model's compute settings and deploy it in one click.

If you haven’t already selected a trained model, you can do so here.

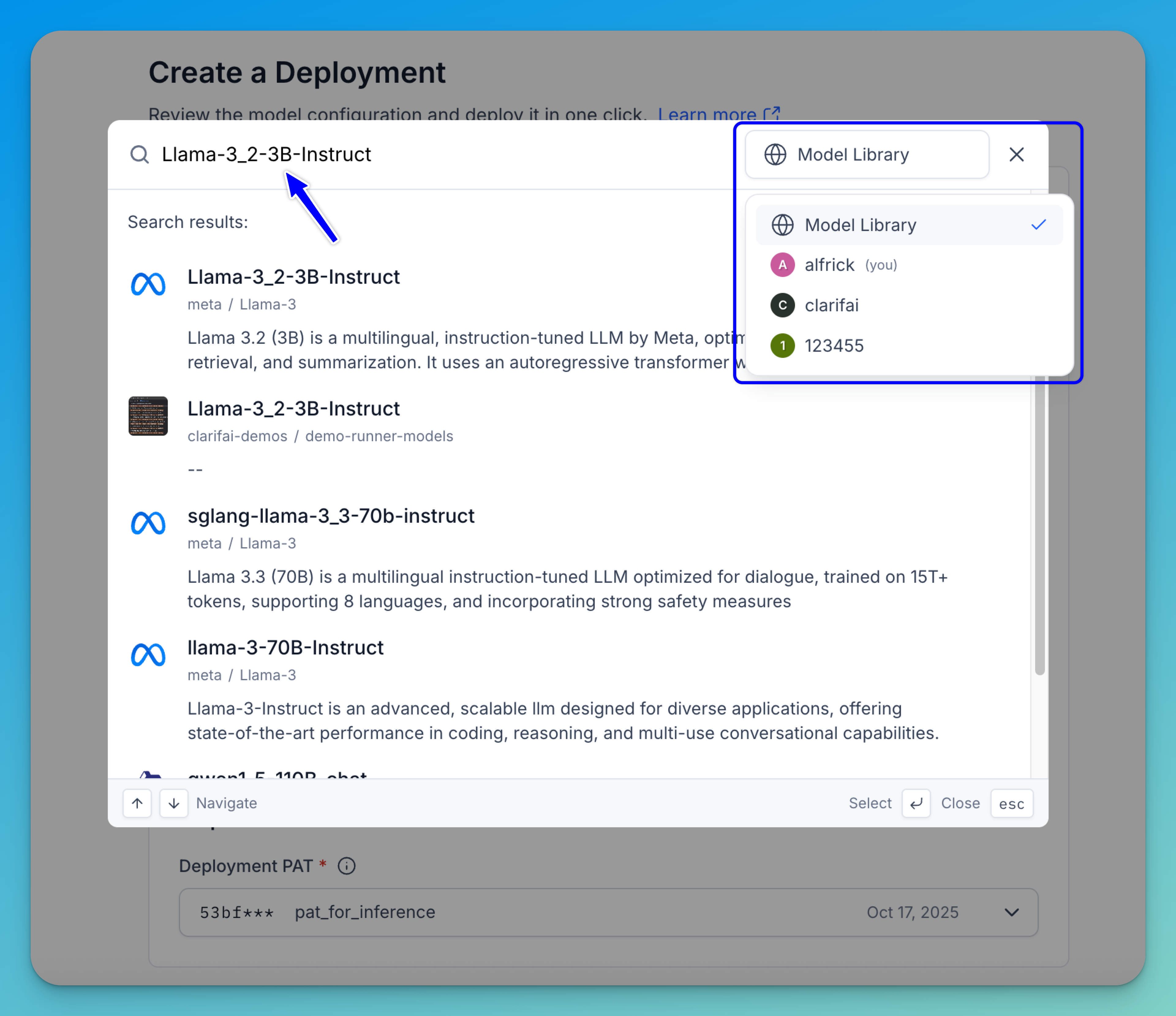

When you click the Search models... field, a selection window appears, allowing you to choose which model to deploy. You can browse through the listed models or use the search bar to quickly find a specific one.

In the upper-right corner of the window, there’s an account selector that lets you switch between different sources — such as your personal workspace, any organizations you belong to, or the public Clarifai model library. Selecting a different source updates the list to show the available models from that account or library.

After you select a model, it appears in the Select a Model field. By default, the latest version of the model is used; to deploy a different version, choose it in the Select Version field.

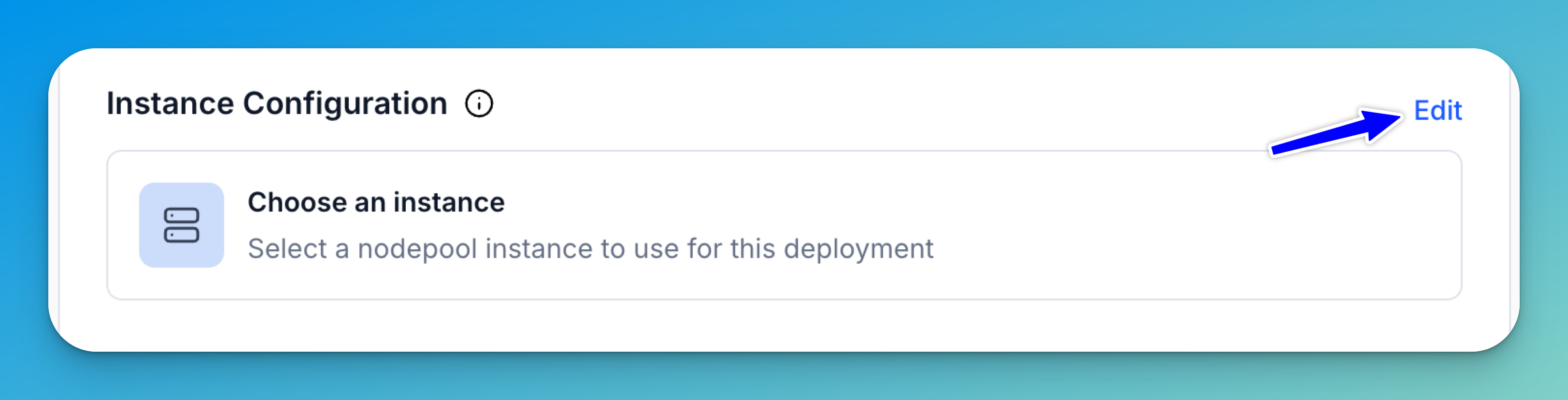

Step 3: Configure an Instance

Next, choose a cloud instance type and hardware configuration that best fits your model’s compute requirements and performance goals. See Supported Cloud Instances for a full list of available GPUs, TPUs, and CPU-only options across all cloud providers.

To do this, select Choose an instance or click the Edit button.

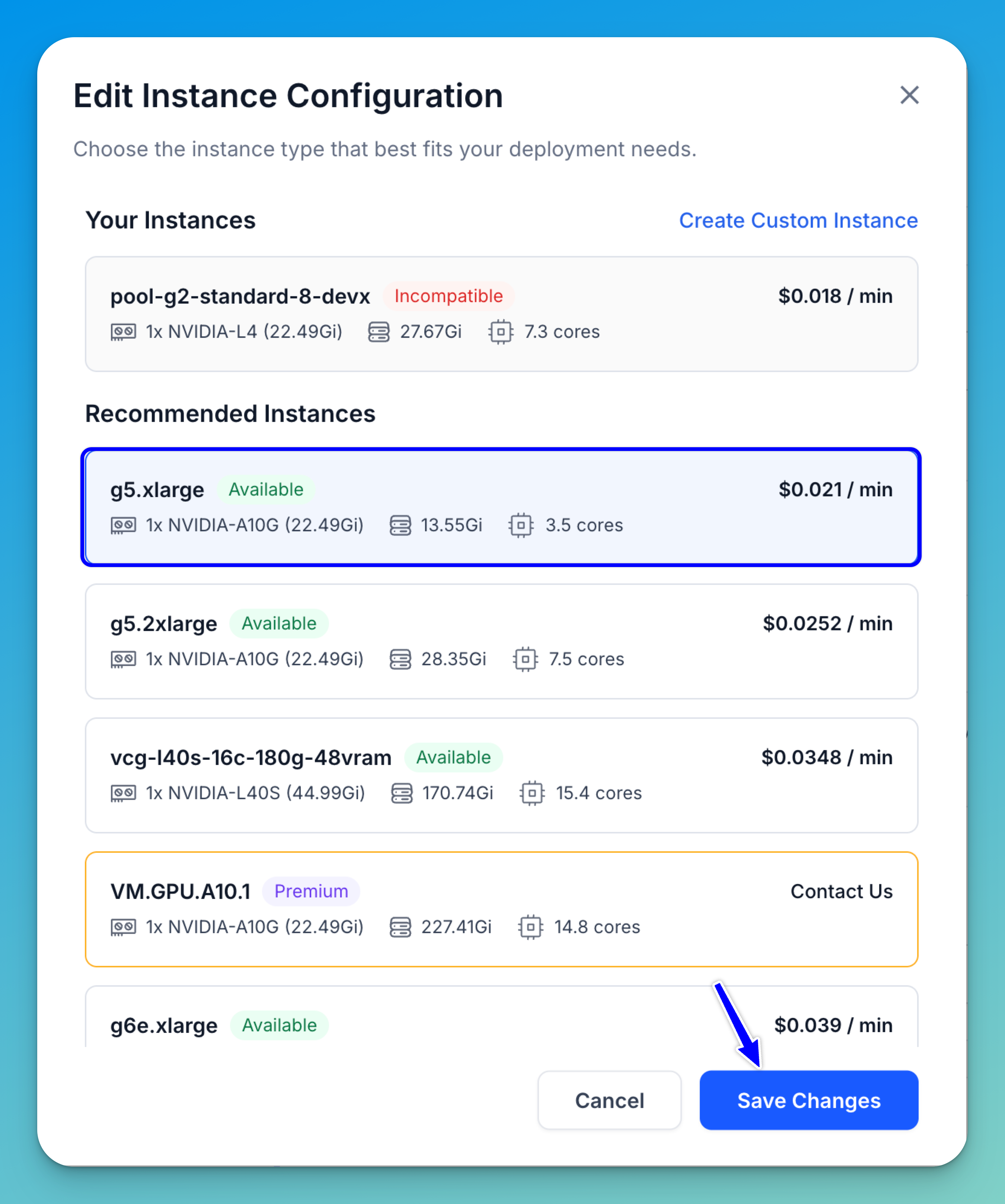

A window will appear, allowing you to select an instance type for your deployment. You can pick from your existing instances listed under Your Instances, or choose from the Recommended Instances, which are pre-configured automatically based on your model’s compatibility and resource needs.

Each instance option displays key details such as GPU type, available memory, CPU cores, and cost per minute. Make sure the instance you select is compatible with your model — incompatible instances will be clearly labeled in red.
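To make the compatibility check concrete, here is a minimal Python sketch that compares an instance's resources with a model's requirements and picks the cheapest instance that fits. It assumes the requirements use the field names of inference_compute_info (as in the Restrict Deployments example output at the end of this page), treats accelerator_memory as total GPU memory on the instance, and reads accelerator_type values as glob patterns. All instance names, GPU types, specs, and prices are hypothetical, and the platform performs its own check; this only illustrates the idea.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Optional

# Binary suffixes used in quantities such as "15Gi" or "512Mi".
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(value: str) -> int:
    """Convert a quantity like '15Gi' to bytes; a bare number is read as bytes."""
    for suffix, factor in _UNITS.items():
        if value.endswith(suffix):
            return int(float(value[: -len(suffix)]) * factor)
    return int(float(value))


@dataclass
class Instance:
    name: str               # hypothetical instance name
    gpu_type: str           # hypothetical type label; "" for CPU-only
    num_gpus: int
    gpu_memory: str         # total accelerator memory (assumption)
    cpu_memory: str
    cost_per_minute: float  # hypothetical price


def is_compatible(req: dict, inst: Instance) -> bool:
    """Compare an instance with requirements shaped like inference_compute_info."""
    needed_gpus = req.get("num_accelerators", 0)
    patterns = req.get("accelerator_type") or ["*"]
    return (
        inst.num_gpus >= needed_gpus
        and (needed_gpus == 0 or any(fnmatchcase(inst.gpu_type, p) for p in patterns))
        and parse_quantity(inst.gpu_memory) >= parse_quantity(req.get("accelerator_memory", "0"))
        # cpu_memory is the larger (stricter) of the two memory fields in the example.
        and parse_quantity(inst.cpu_memory) >= parse_quantity(req.get("cpu_memory", "0"))
    )


def cheapest_compatible(req: dict, instances: List[Instance]) -> Optional[Instance]:
    """Return the lowest-cost compatible instance, or None if nothing fits."""
    fits = [i for i in instances if is_compatible(req, i)]
    return min(fits, key=lambda i: i.cost_per_minute) if fits else None


# Requirements copied from the inference_compute_info example on this page.
requirements = {
    "num_accelerators": 1,
    "accelerator_memory": "15Gi",
    "cpu_memory": "13Gi",
    "accelerator_type": ["NVIDIA-*"],
}

# Hypothetical catalog; real options come from the UI or `clarifai list-instances`.
instances = [
    Instance("small-gpu", "NVIDIA-SMALL", 1, "8Gi", "16Gi", 0.01),
    Instance("mid-gpu", "NVIDIA-MEDIUM", 1, "24Gi", "16Gi", 0.02),
    Instance("large-gpu", "NVIDIA-LARGE", 1, "48Gi", "64Gi", 0.05),
    Instance("cpu-only", "", 0, "0", "64Gi", 0.005),
    Instance("other-accel", "OTHER-ACCEL", 1, "32Gi", "32Gi", 0.001),
]

best = cheapest_compatible(requirements, instances)
print(best.name if best else "no compatible instance")
```

Picking the cheapest compatible option mirrors the trade-off the selection window asks you to make; in practice you may also weigh GPU type or expected latency.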

If you wish to use a premium instance, click Contact Us to reach our team for manual provisioning. You can also click Create Custom Instance in the upper-right corner to configure a new instance that matches your specific requirements.

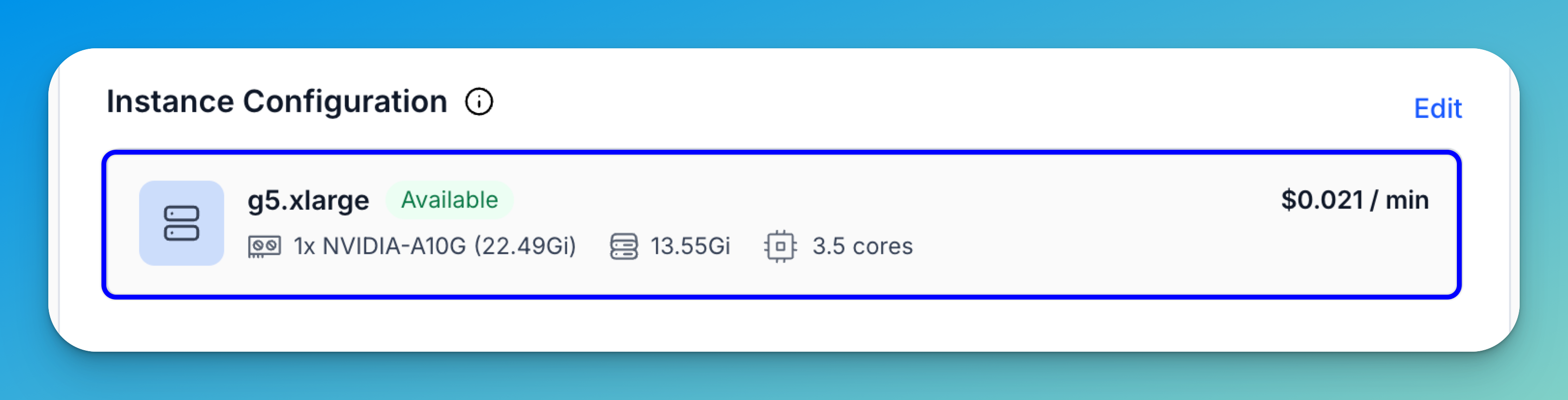

Once you’ve selected your preferred instance, click Save Changes to apply the configuration. The chosen instance will then appear in the Instance Configuration field of your deployment setup.

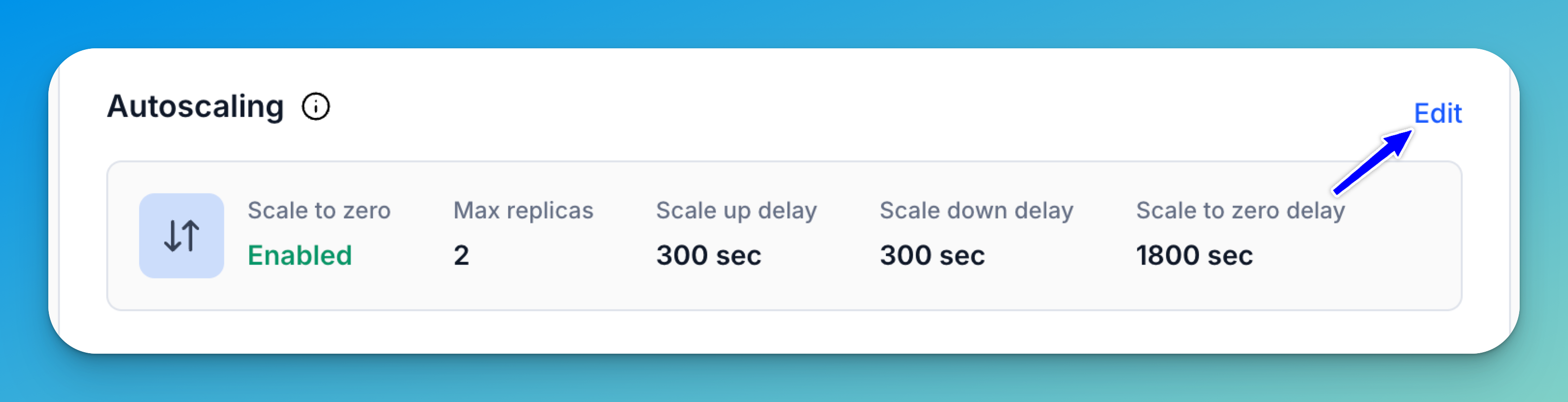

Step 4: Set Autoscaling

Next, configure how your deployment scales based on usage and demand. You can either keep the default autoscaling settings provided or customize them to better suit your workload.

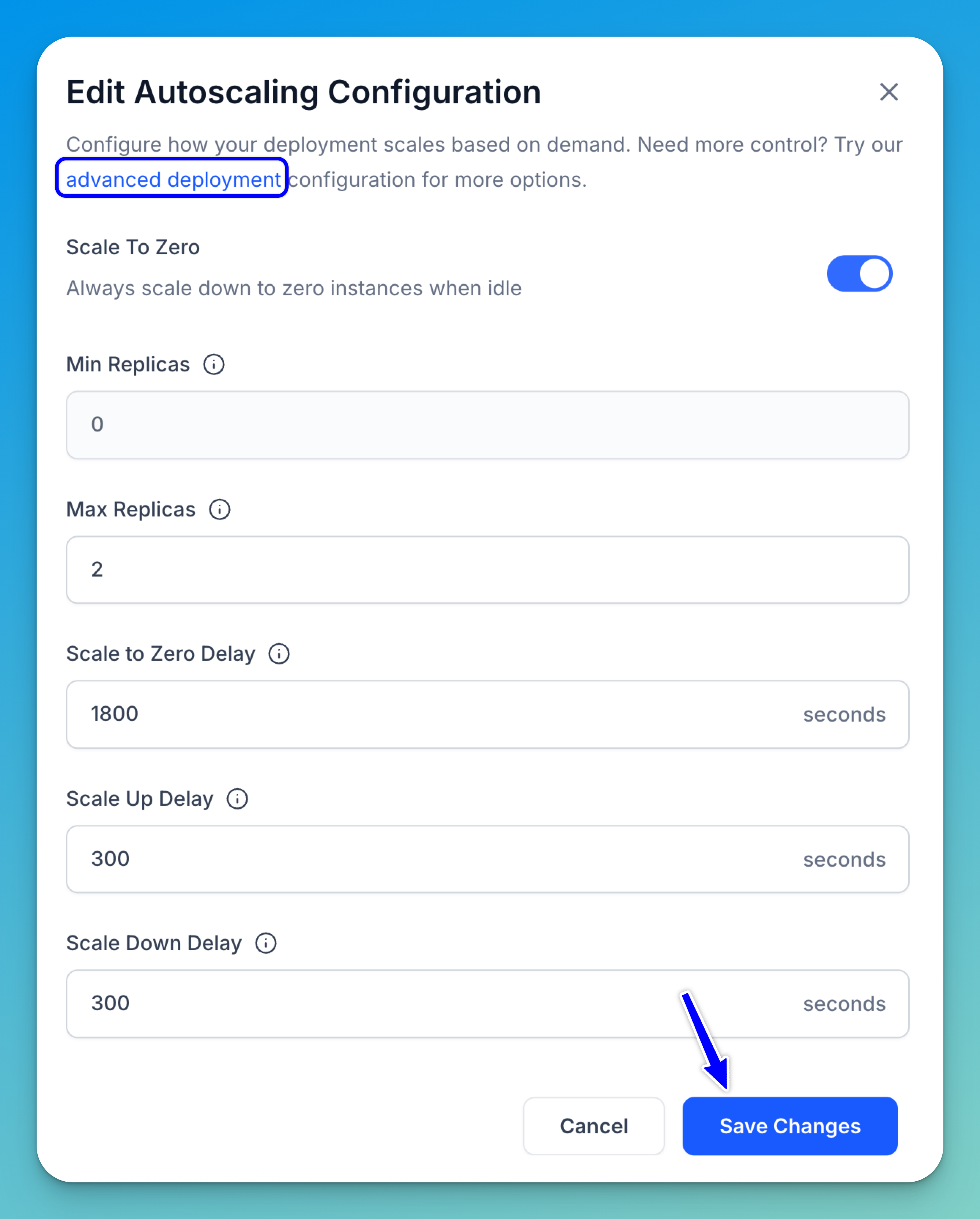

To adjust the settings, select the Autoscaling field or click the Edit button.

This opens a window where you can define how your deployment automatically scales up or down depending on traffic and resource utilization.

Once you’ve configured your preferred scaling parameters, click Save Changes to apply the updates.

These are the autoscaling settings you can configure:

- Scale To Zero: Turn this on if you want your deployment to automatically pause and use no active instances when it’s not in use.

- Min Replicas / Max Replicas: This specifies the minimum and maximum number of model replicas to deploy, based on your performance needs and anticipated workload. Adding replicas enables horizontal scaling, where the workload is distributed across several instances of the model rather than relying on a single one. However, more replicas consume more resources and may lead to higher costs. Each node in your nodepool can host multiple replicas, depending on model size and available resources.

  Note: Your nodepool must have enough capacity to support this range.

  Click here to find out how to set up node autoscaling ranges to automatically adjust your infrastructure based on traffic demand.

- Scale To Zero Delay: This sets the idle time (in seconds) before the deployment scales down to zero replicas.

- Scale Up Delay: This sets the waiting period (in seconds) before adding replicas in response to rising demand. Shorter delays respond faster but may over-provision.

- Scale Down Delay: This sets the waiting period (in seconds) before removing replicas after demand decreases. A longer delay helps avoid unnecessary scale-downs during brief dips in activity. Note that scaling down stops at the configured minimum number of replicas.
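These settings correspond to fields of the autoscale_config object in the API request shown under Via the API. The following Python sketch validates UI-style values and returns such an object. The helper name and its checks are hypothetical, the 30-second defaults are simply the values used in that cURL example, and treating Scale To Zero as a minimum of zero replicas is an assumption.

```python
def build_autoscale_config(
    min_replicas: int,
    max_replicas: int,
    *,
    scale_to_zero: bool = False,
    scale_to_zero_delay_seconds: int = 30,
    scale_up_delay_seconds: int = 30,
    scale_down_delay_seconds: int = 30,
) -> dict:
    """Validate autoscaling settings and return an autoscale_config dict.

    Field names follow the cURL example in the "Via the API" section.
    """
    if scale_to_zero:
        # Assumption: turning on Scale To Zero means a minimum of zero replicas.
        min_replicas = 0
    if min_replicas < 0:
        raise ValueError("min_replicas cannot be negative")
    if max_replicas < max(min_replicas, 1):
        raise ValueError("max_replicas must be at least 1 and >= min_replicas")
    delays = {
        "scale_to_zero_delay_seconds": scale_to_zero_delay_seconds,
        "scale_up_delay_seconds": scale_up_delay_seconds,
        "scale_down_delay_seconds": scale_down_delay_seconds,
    }
    for name, seconds in delays.items():
        if seconds < 0:
            raise ValueError(f"{name} cannot be negative")
    return {"min_replicas": min_replicas, "max_replicas": max_replicas, **delays}


config = build_autoscale_config(1, 20)
print(config)
```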

In the Edit Autoscaling Configuration window, you can click the Advanced Deployment link to access additional configuration options that provide greater control over your deployment behavior.

Advanced Deployment

The following settings are available for customization:

- Model: Select the model (and optionally the version) you want to deploy, as described earlier.

- Cluster: Select the cluster you want to use.

- Nodepool: Choose a nodepool to run your model.

- Deployment ID: Provide a deployment ID to uniquely identify your deployment. You can also add an optional description to provide additional context and make it easier to recognize later.

- Advanced Deployment Settings: If needed, you can configure additional advanced deployment settings. In addition to the autoscaling options described earlier, the following settings are available:

  - Traffic History Timeframe: This defines the period (in seconds) of traffic history that your deployment reviews before making scaling decisions.

  - Disable Nodepool Packing: Packing refers to placing multiple replicas on the same node to improve resource utilization and reduce costs. When set to false (the default), replicas may be packed together for efficiency. When set to true, deployments are restricted to a single model replica per node, which can improve isolation or meet specific performance needs, but may result in underutilized nodes and higher costs.
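To see why disabling packing can raise costs, the following simplified Python sketch counts the nodes a deployment needs. It assumes replicas are limited only by GPU memory and that every node costs the same per minute; the node size, replica size, and price are hypothetical, and the real scheduler also accounts for CPU, system memory, and GPU count.

```python
import math


def replicas_per_node(node_gpu_mem_gib: int, replica_gpu_mem_gib: int,
                      disable_packing: bool) -> int:
    """How many replicas fit on one node under a GPU-memory-only model."""
    if replica_gpu_mem_gib > node_gpu_mem_gib:
        raise ValueError("a single replica does not fit on this node")
    if disable_packing:
        return 1  # one replica per node, regardless of spare capacity
    return node_gpu_mem_gib // replica_gpu_mem_gib


def nodes_needed(replicas: int, node_gpu_mem_gib: int, replica_gpu_mem_gib: int,
                 disable_packing: bool = False) -> int:
    """Number of nodes required to host the given number of replicas."""
    per_node = replicas_per_node(node_gpu_mem_gib, replica_gpu_mem_gib, disable_packing)
    return math.ceil(replicas / per_node)


# Hypothetical numbers: 6 replicas of a model needing 15 GiB, on 48 GiB nodes.
NODE_COST_PER_MINUTE = 0.05  # hypothetical price per node
for packing_disabled in (False, True):
    n = nodes_needed(6, 48, 15, disable_packing=packing_disabled)
    print(f"disable_packing={packing_disabled}: {n} nodes, "
          f"~{n * NODE_COST_PER_MINUTE:.2f} per minute")
```

With these numbers, packing fits three replicas per node, so six replicas need two nodes instead of six.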

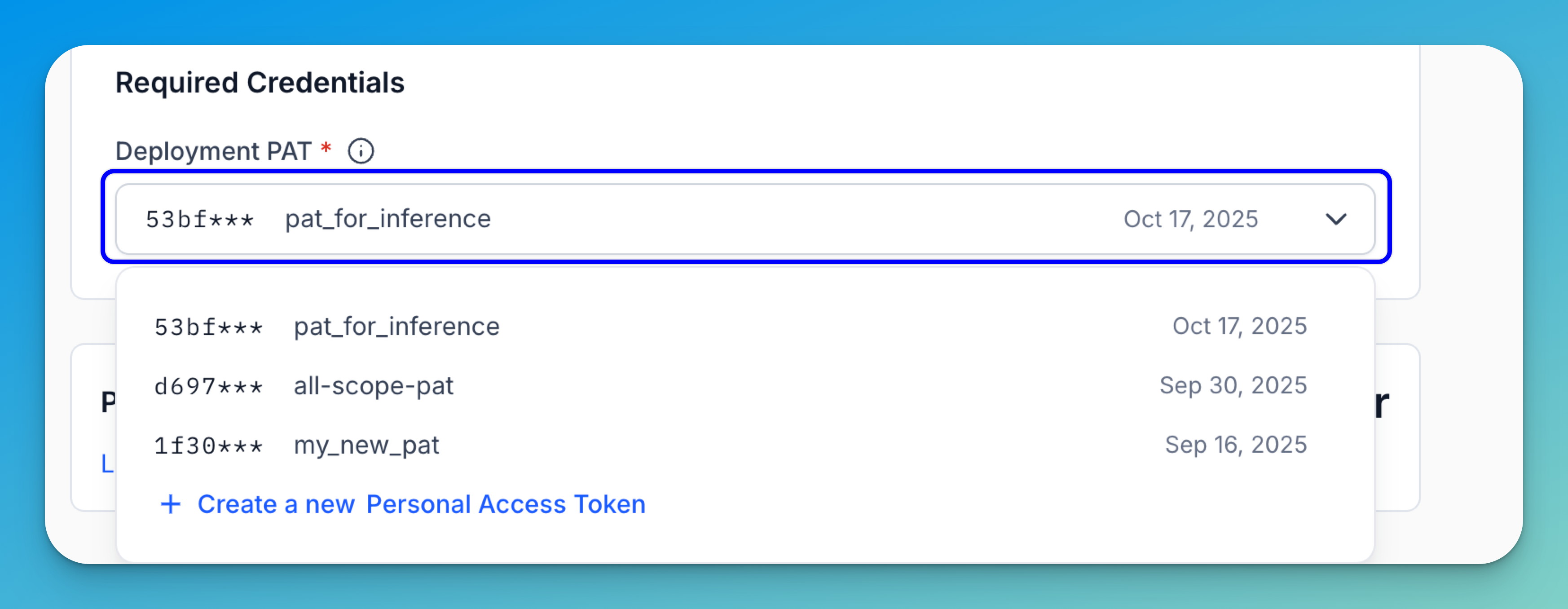

Step 5: Set Required Credentials

Select a Personal Access Token (PAT) to associate with your deployment. This token authorizes access to the resources your deployment needs to run.

Note: Be cautious when modifying the PAT after the deployment has been created, as doing so may cause the associated compute resources to stop functioning properly.

If you don’t already have a suitable PAT, you can click the button provided to generate one for this deployment.

Step 6: Create a Deployment

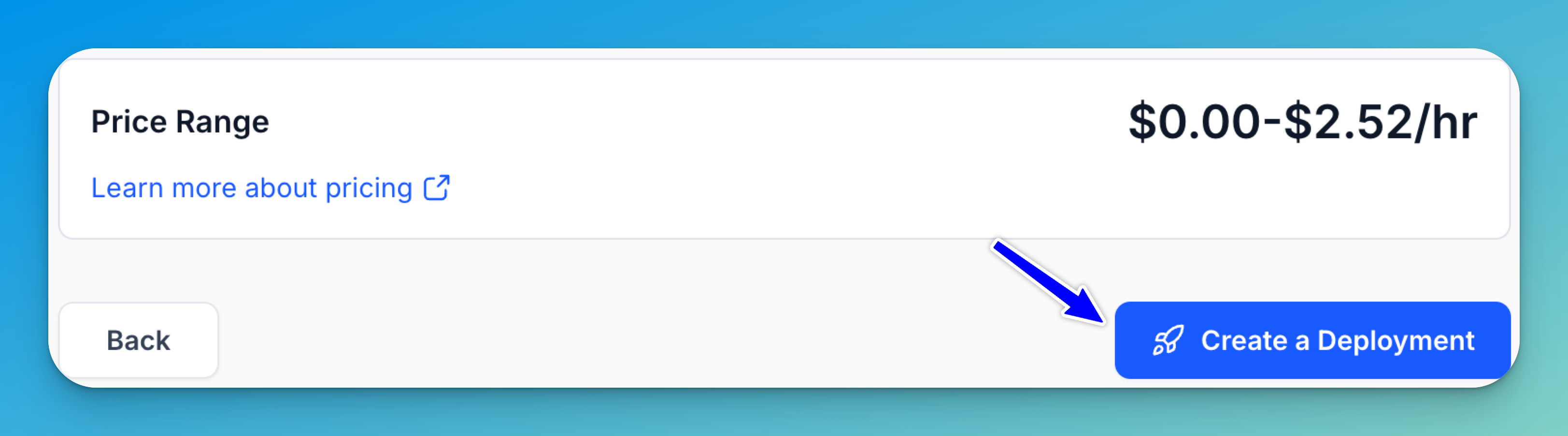

After completing all configuration steps, click Create a Deployment to launch your deployment. If a cluster or nodepool does not already exist, they will be automatically created using your specified settings, and your model will be deployed within this infrastructure.

Note: Review the price range displayed on the page to understand the estimated deployment cost. You can learn more about pricing here.

Once the deployment is created, you’ll be redirected to the nodepool page, where your deployed model will be listed. You can then start using the deployment to run inferences.

Via the CLI

The Clarifai CLI provides a one-command deployment workflow that creates all required infrastructure automatically, so you don't need to create clusters or nodepools manually first.

One-Command Deploy

Deploy a local model directory to the cloud in a single step:


clarifai model deploy ./my-model --instance g5.xlarge

This uploads your model, creates a compute cluster and nodepool, deploys the model, and monitors the deployment until it's ready.

Deploy an Already-Uploaded Model

If your model is already uploaded to Clarifai, deploy it by URL:


clarifai model deploy --model-url https://clarifai.com/user/app/models/my-model --instance g5.xlarge

Browse Available Instances

View all available hardware configurations using the dedicated list-instances command:


# List all available instances
clarifai list-instances

# Filter by cloud provider
clarifai list-instances --cloud aws

# Filter by GPU type
clarifai list-instances --gpu L40S

# Or use the deploy flag shortcut
clarifai model deploy --instance-info
clarifai model deploy --instance-info --cloud aws

Override Instance Type

If your config.yaml already has a compute.instance value (auto-selected during model init), you can override it at deploy time. The --instance flag always takes priority over the config:


# config.yaml has compute.instance: g5.xlarge, but deploy with a larger GPU
clarifai model deploy ./my-model --instance g6e.2xlarge

Autoscaling

Control how your deployment scales:


clarifai model deploy ./my-model --instance g5.xlarge --min-replicas 2 --max-replicas 10
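If you drive deployments from a script or CI job, you can assemble these commands programmatically. This Python sketch uses only the flags shown on this page (--model-url, --instance, --min-replicas, --max-replicas); the helper is hypothetical and only builds the argument list without running it. Omitting instance should leave the choice to compute.instance in config.yaml, since --instance takes priority only when you pass it.

```python
import shlex
from typing import List, Optional


def build_deploy_command(
    model_path: Optional[str] = None,
    *,
    model_url: Optional[str] = None,
    instance: Optional[str] = None,
    min_replicas: Optional[int] = None,
    max_replicas: Optional[int] = None,
) -> List[str]:
    """Assemble a `clarifai model deploy` argument list from the documented flags."""
    if (model_path is None) == (model_url is None):
        raise ValueError("pass exactly one of model_path or model_url")
    if min_replicas is not None and max_replicas is not None and min_replicas > max_replicas:
        raise ValueError("min_replicas cannot exceed max_replicas")
    cmd = ["clarifai", "model", "deploy"]
    if model_path is not None:
        cmd.append(model_path)             # local model directory
    else:
        cmd += ["--model-url", model_url]  # already-uploaded model
    if instance is not None:
        cmd += ["--instance", instance]    # overrides compute.instance in config.yaml
    if min_replicas is not None:
        cmd += ["--min-replicas", str(min_replicas)]
    if max_replicas is not None:
        cmd += ["--max-replicas", str(max_replicas)]
    return cmd


cmd = build_deploy_command("./my-model", instance="g5.xlarge", min_replicas=2, max_replicas=10)
print(shlex.join(cmd))
# To run it (requires the clarifai CLI to be installed and authenticated):
# import subprocess; subprocess.run(cmd, check=True)
```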

Lifecycle Management

After deployment, use these commands to manage it:

# Check status
clarifai model status --deployment <deployment-id>

# Stream logs
clarifai model logs --deployment <deployment-id>

# Remove deployment
clarifai model undeploy --deployment <deployment-id>

For the full options reference, see the CLI Reference.

Via the API

Create a Deployment

To deploy a model within a nodepool you've created, provide the deployment_id and config_filepath parameters to the create_deployment method of the Nodepool class.

You can learn how to create the deployment_config.yaml file, which contains the deployment configuration details, here.

Note:

- Each model or workflow can only have one deployment per nodepool.

- If you're creating a multi-nodepool deployment using the Python SDK, initializing the Nodepool instance with the first nodepool only is sufficient.

Python SDK:

from clarifai.client.nodepool import Nodepool

# Set PAT as an environment variable
# export CLARIFAI_PAT=YOUR_PAT_HERE  # Unix-like systems
# set CLARIFAI_PAT=YOUR_PAT_HERE  # Windows

# Initialize the Nodepool instance
nodepool = Nodepool(
    user_id="YOUR_USER_ID_HERE",
    nodepool_id="test-nodepool"
)

# Create a new deployment
deployment = nodepool.create_deployment(
    deployment_id="test-deployment",
    config_filepath="./configs/deployment_config.yaml"
)

CLI:

clarifai deployment create NODEPOOL_ID DEPLOYMENT_ID --config DEPLOYMENT-CONFIG-FILEPATH

cURL:

curl -X POST "https://api.clarifai.com/v2/users/YOUR_USER_ID_HERE/deployments/" \
  -H "Authorization: Key YOUR_PAT_HERE" \
  -H "Content-Type: application/json" \
  -d '{
    "deployments": [
      {
        "id": "test-deployment",
        "description": "test deployment",
        "user_id": "YOUR_USER_ID_HERE",
        "autoscale_config": {
          "min_replicas": 1,
          "max_replicas": 20,
          "traffic_history_seconds": 100,
          "scale_down_delay_seconds": 30,
          "scale_to_zero_delay_seconds": 30,
          "scale_up_delay_seconds": 30,
          "disable_packing": false
        },
        "worker": {
          "model": {
            "id": "Llama-3_2-3B-Instruct",
            "model_version": {
              "id": "fe271b43266a45a5b068766b6437687f"
            },
            "user_id": "meta",
            "app_id": "Llama-3"
          }
        },
        "scheduling_choice": 4,
        "nodepools": [
          {
            "id": "test-nodepool",
            "compute_cluster": {
              "id": "test-compute-cluster",
              "user_id": "YOUR_USER_ID_HERE"
            }
          }
        ],
        "visibility": {
          "gettable": 10
        }
      }
    ]
  }'
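If you'd rather not shell out to cURL, here is a sketch of the same request using only Python's standard library (json and urllib.request). It reuses the endpoint, headers, and payload from the cURL example but only builds the request; sending it is left commented out. The helper name is hypothetical, and reading the PAT from CLARIFAI_PAT follows the SDK example above.

```python
import json
import os
import urllib.request

API_BASE = "https://api.clarifai.com/v2"


def build_create_deployment_request(user_id: str, pat: str,
                                    deployment: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request shown in the cURL example."""
    body = json.dumps({"deployments": [deployment]}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/users/{user_id}/deployments/",
        data=body,
        method="POST",
        headers={"Authorization": f"Key {pat}", "Content-Type": "application/json"},
    )


USER_ID = "YOUR_USER_ID_HERE"
deployment = {
    "id": "test-deployment",
    "description": "test deployment",
    "user_id": USER_ID,
    "autoscale_config": {
        "min_replicas": 1,
        "max_replicas": 20,
        "traffic_history_seconds": 100,
        "scale_down_delay_seconds": 30,
        "scale_to_zero_delay_seconds": 30,
        "scale_up_delay_seconds": 30,
        "disable_packing": False,
    },
    "worker": {
        "model": {
            "id": "Llama-3_2-3B-Instruct",
            "model_version": {"id": "fe271b43266a45a5b068766b6437687f"},
            "user_id": "meta",
            "app_id": "Llama-3",
        }
    },
    "scheduling_choice": 4,
    "nodepools": [
        {"id": "test-nodepool",
         "compute_cluster": {"id": "test-compute-cluster", "user_id": USER_ID}}
    ],
    "visibility": {"gettable": 10},
}

# Read the PAT from the environment, falling back to the placeholder.
pat = os.environ.get("CLARIFAI_PAT", "YOUR_PAT_HERE")
req = build_create_deployment_request(USER_ID, pat, deployment)

# Uncomment to send the request:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["status"])
```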

Example Output

[INFO] 14:45:29.871319 Deployment with ID 'test-deployment' is created:
code: SUCCESS
description: "Ok"
req_id: "sdk-python-11.7.5-1eb407b9e125478287d552fb76bc37dd"

After creating it, initialize the Deployment class by providing the user_id and deployment_id parameters.


from clarifai.client.deployment import Deployment

# Initialize the deployment
deployment = Deployment(
    user_id="YOUR_USER_ID_HERE",
    deployment_id="test-deployment"
)

Restrict Deployments

You can restrict the type of compute that an existing model you own can be deployed to. By patching the model's deploy_restriction value, you define whether it runs on shared or dedicated resources.

These are the values you can set:

- 0 (USAGE_RESTRICTION_NOT_SET): The default, where no explicit restriction is set.

- 1 (NO_LIMITS): The model can be deployed on any kind of compute (shared or dedicated). There are no policy constraints.

- 2 (SHARED_COMPUTE_ONLY): The model can only run on shared compute resources. This is typically cheaper but may have lower isolation or performance guarantees.

- 3 (DEDICATED_COMPUTE_ONLY): The model can only run on dedicated compute resources. This is used when you need guaranteed performance, security isolation, or compliance.


curl -X PATCH "https://api.clarifai.com/v2/users/YOUR_USER_ID_HERE/apps/YOUR_APP_ID_HERE/models" \
  -H "Authorization: Key YOUR_PAT_HERE" \
  -H "Content-Type: application/json" \
  -d '{
    "models": [
      {
        "id": "YOUR_MODEL_ID_HERE",
        "deploy_restriction": 2
      }
    ],
    "action": "merge"
  }'
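The PATCH body above can also be built programmatically. This Python sketch wraps the four deploy_restriction values listed earlier in an IntEnum so that unknown numbers are rejected before any request is sent. The DeployRestriction class and the helper function are hypothetical conveniences, not part of the Clarifai SDK.

```python
import json
from enum import IntEnum


class DeployRestriction(IntEnum):
    """deploy_restriction values as documented (hypothetical helper, not an SDK class)."""
    USAGE_RESTRICTION_NOT_SET = 0
    NO_LIMITS = 1
    SHARED_COMPUTE_ONLY = 2
    DEDICATED_COMPUTE_ONLY = 3


def deploy_restriction_patch(model_id: str, restriction) -> dict:
    """Return the JSON body for PATCH /v2/users/{user_id}/apps/{app_id}/models."""
    value = DeployRestriction(restriction)  # raises ValueError for unknown values
    return {
        "models": [{"id": model_id, "deploy_restriction": int(value)}],
        "action": "merge",
    }


body = deploy_restriction_patch("YOUR_MODEL_ID_HERE", DeployRestriction.SHARED_COMPUTE_ONLY)
print(json.dumps(body, indent=2))
```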

Example Output

{
  "status": {
    "code": 10000,
    "description": "Ok",
    "req_id": "b6af331eac444e76b88abea88d2d4579"
  },
  "models": [{
    "id": "upload55",
    "name": "upload55",
    "created_at": "2025-08-21T17:05:33.491470Z",
    "modified_at": "2025-09-09T18:36:48.844230Z",
    "app_id": "uploaded-models",
    "model_version": {
      "id": "991d5569b152462aad563cfc24faf477",
      "created_at": "2025-08-21T17:05:34.086694Z",
      "status": {
        "code": 21100,
        "description": "Model is trained and ready for deployment"
      },
      "completed_at": "2025-08-21T17:05:41.982881Z",
      "visibility": {
        "gettable": 10
      },
      "app_id": "uploaded-models",
      "user_id": "alfrick",
      "metadata": {},
      "output_info": {
        "output_config": {
          "max_concepts": 0,
          "min_value": 0
        },
        "message": "Show output_info with: GET /models/{model_id}/output_info",
        "fields_map": {},
        "params": {
          "max_tokens": 512,
          "secrets": [],
          "temperature": 1
        }
      },
      "input_info": {
        "fields_map": {}
      },
      "train_info": {},
      "import_info": {},
      "inference_compute_info": {
        "cpu_limit": "1",
        "cpu_memory": "13Gi",
        "cpu_requests": "1",
        "cpu_memory_requests": "2Gi",
        "num_accelerators": 1,
        "accelerator_memory": "15Gi",
        "accelerator_type": ["NVIDIA-*"]
      },
      "method_signatures": [{
        "name": "generate",
        "method_type": 2,
        "description": "This method streams multiple outputs instead of returning just one.\nIt takes an input string and yields a sequence of outputs.",
        "input_fields": [{
          "name": "text1",
          "type": 1
        }],
        "output_fields": [{
          "name": "return",
          "type": 1,
          "iterator": true
        }]
      }]
    },
    "user_id": "alfrick",
    "model_type_id": "text-to-text",
    "visibility": {
      "gettable": 10
    },
    "metadata": {},
    "presets": {},
    "toolkits": [],
    "use_cases": [],
    "languages": [],
    "languages_full": [],
    "check_consents": [],
    "workflow_recommended": false,
    "featured_order": 0,
    "deploy_restriction": 2,
    "open_router_info": {
      "params": {}
    }
  }]
}
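To confirm that the patch took effect, you can check the response for a success status and the expected deploy_restriction value. This Python sketch assumes a response shaped like the example output above, where status code 10000 accompanies "Ok"; the helper name is hypothetical.

```python
SUCCESS_CODE = 10000  # status code returned with "Ok" in the example output


def restriction_applied(response: dict, model_id: str, expected: int) -> bool:
    """Check a PATCH response for success and the expected deploy_restriction."""
    if response.get("status", {}).get("code") != SUCCESS_CODE:
        return False
    for model in response.get("models", []):
        if model.get("id") == model_id:
            return model.get("deploy_restriction") == expected
    return False  # the model was not in the response


# Trimmed copy of the example output, keeping only the fields checked here.
example = {
    "status": {"code": 10000, "description": "Ok"},
    "models": [{"id": "upload55", "deploy_restriction": 2}],
}
print(restriction_applied(example, "upload55", 2))
```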