Base Workflows

Index your data and provide your app with a default knowledge base

The base workflow acts as the default knowledge base for your app and provides the basic structure for indexing your data. It gives you a "head start" when working with your data — by pre-indexing your inputs for search and by providing a default embedding for your custom models.

How it Works

Your base workflow makes your data sortable and searchable as soon as it is uploaded. When you add an input to your app, the base workflow of your app computes the outputs from the model(s) in that workflow and indexes those outputs.

A base workflow usually contains an embedding model, which indexes the inputs. Those indexed outputs enable search and transfer learning-based training on top of the base workflow model(s).
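To make the idea of "indexing with an embedding model" more concrete, here is a minimal, purely illustrative sketch. It assumes a toy word-count embedding (`toy_embed`) and an in-memory index (`ToyIndex`); both names are hypothetical and do not reflect how Clarifai implements indexing or search internally.

```python
import math

# Hypothetical fixed vocabulary used by the toy embedding below.
VOCAB = ["cat", "dog", "pizza", "pasta"]


def toy_embed(text):
    """Stand-in for an embedding model: counts vocabulary words in the text."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def cosine_similarity(a, b):
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyIndex:
    """Embeds each input as it is added, then ranks inputs by similarity."""

    def __init__(self, embed):
        self.embed = embed
        self.vectors = {}  # input_id -> embedding vector

    def add_input(self, input_id, data):
        # Indexing happens at upload time, as with a base workflow.
        self.vectors[input_id] = self.embed(data)

    def search(self, query, top_k=3):
        query_vector = self.embed(query)
        scored = [
            (cosine_similarity(query_vector, vector), input_id)
            for input_id, vector in self.vectors.items()
        ]
        scored.sort(reverse=True)
        return [input_id for _, input_id in scored[:top_k]]


index = ToyIndex(toy_embed)
index.add_input("a", "cat and dog")
index.add_input("b", "pizza and pasta")
best_match = index.search("dog", top_k=1)
```

Because every input is embedded when it is added, the search step only has to embed the query and compare vectors, which is why inputs become searchable as soon as they are uploaded.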

How to Choose a Base Workflow

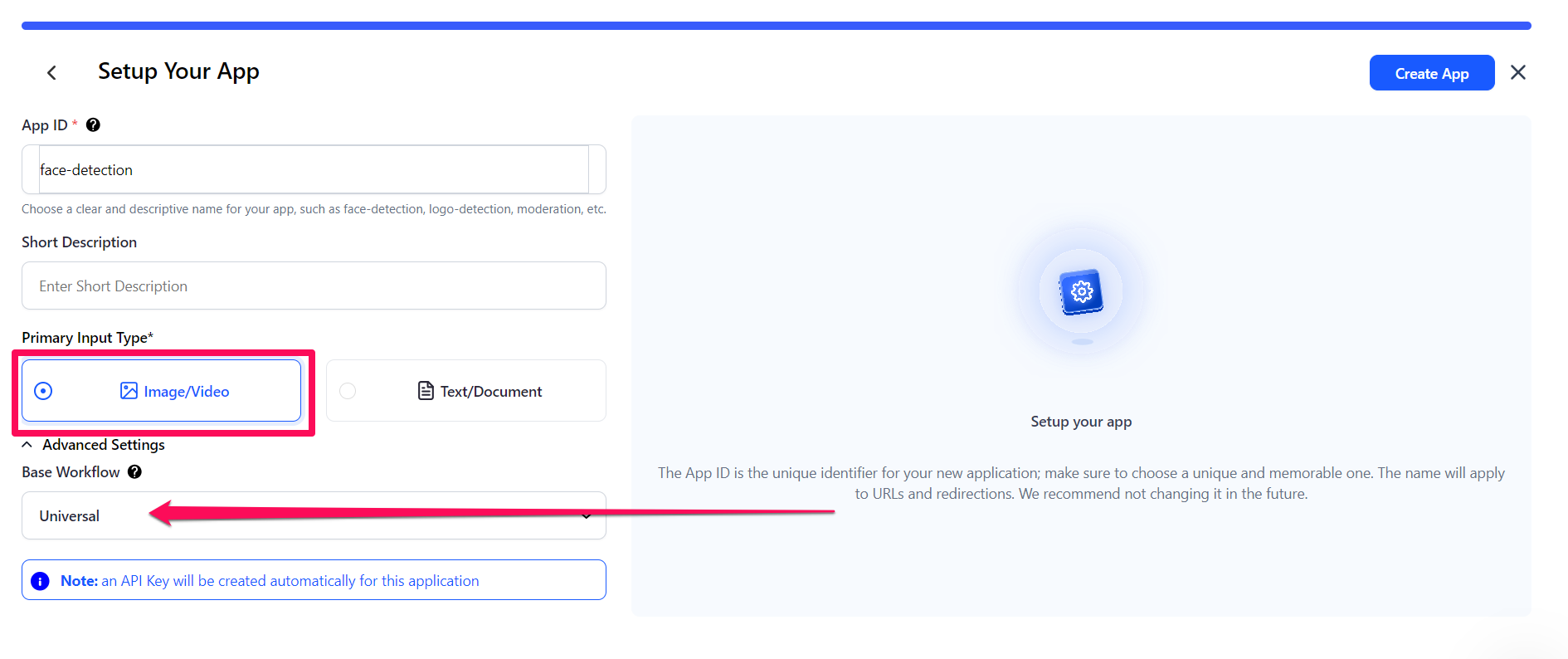

You can choose a base workflow when creating a new application. After you select the primary input type for your app, a base workflow is automatically selected for you in the collapsible Advanced Settings field.

Alternatively, you can select the one that aligns most with your specific use case from the drop-down list accessible when you click the workflows search box.

- If you select Image/Video as the primary input type when creating an application, Universal will automatically be set as the default base workflow. If you select Text/Document as the input type, Text will be set as the default base workflow.

- If you create an application via the API, Universal will be set as the default base workflow unless you specify a different one.
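The default-selection rules above can be summarized in a few lines of Python. This is only a sketch of the documented behavior; the function name `default_base_workflow` and its parameters are hypothetical and are not part of any Clarifai SDK.

```python
# Defaults applied in the UI, keyed by the primary input type (from the text above).
UI_DEFAULT_BASE_WORKFLOWS = {
    "Image/Video": "Universal",
    "Text/Document": "Text",
}

# Default applied when an app is created via the API without specifying a workflow.
API_DEFAULT_BASE_WORKFLOW = "Universal"


def default_base_workflow(primary_input_type=None, requested_workflow=None):
    """Return the base workflow an app would start with.

    primary_input_type: the input type picked in the UI, or None for API creation.
    requested_workflow: an explicitly chosen workflow, which overrides the default.
    """
    if requested_workflow:
        return requested_workflow
    if primary_input_type is None:
        return API_DEFAULT_BASE_WORKFLOW
    return UI_DEFAULT_BASE_WORKFLOWS[primary_input_type]


ui_image_default = default_base_workflow("Image/Video")
ui_text_default = default_base_workflow("Text/Document")
api_default = default_base_workflow()
explicit_choice = default_base_workflow("Image/Video", requested_workflow="Food")
```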

How to Change a Base Workflow

You can change the base workflow after your app is created. You can choose either a public workflow available in the Clarifai app or a workflow you've created in your app.

Update via the UI

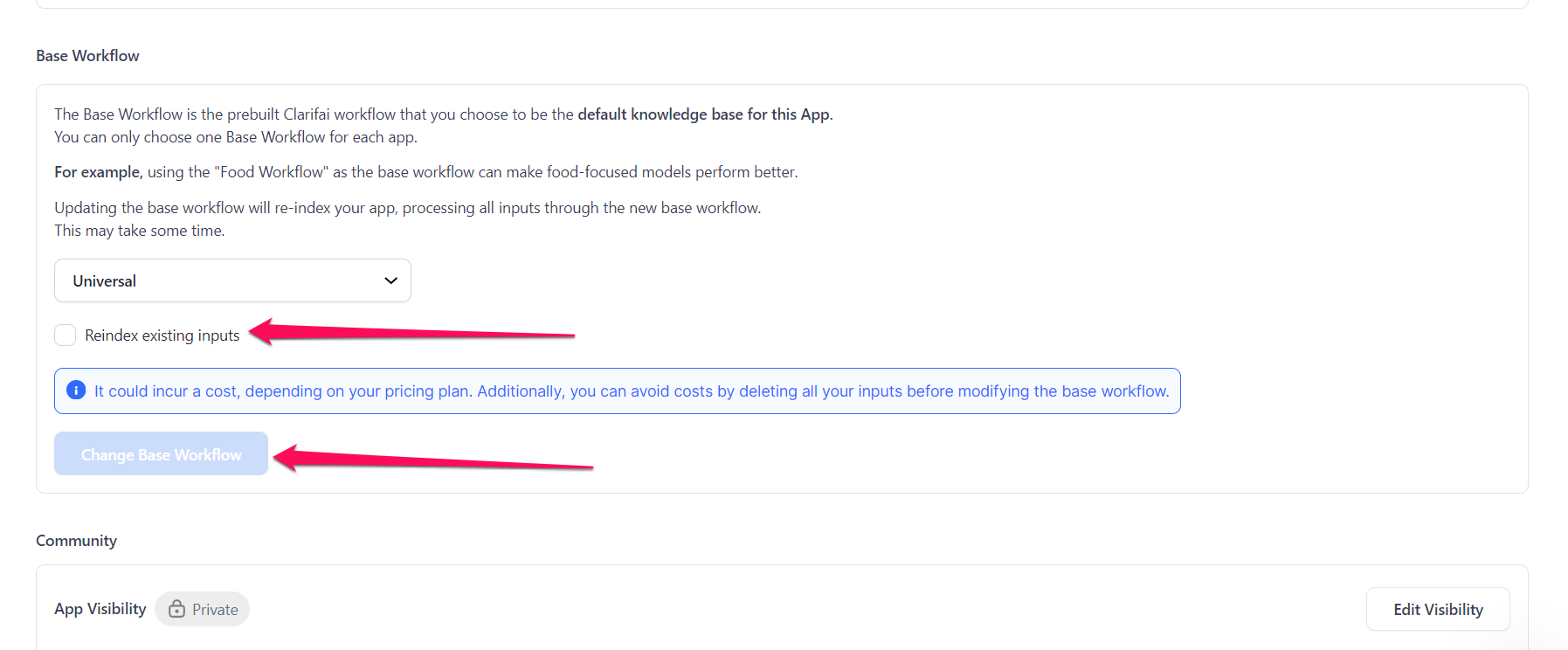

To change the base workflow, go to your App Settings page and select your preferred workflow from the drop-down list that appears when you click the workflows search box.

After selecting your preferred base workflow, click the Change Base Workflow button.

- When you update the base workflow, you can choose to reindex the existing inputs in your app. Reindexing processes all inputs through the new base workflow. This may take some time and could incur costs. To avoid reindexing, leave the Reindex existing inputs checkbox unchecked.

- During reindexing, existing data from the old base workflow isn't deleted. This means you can easily revert to the old base workflow if needed.
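The two points above can be pictured with a small conceptual model. The sketch below is an assumption-laden illustration, not Clarifai's storage design: `ToyApp`, `run_workflow`, and `reindex_existing` are hypothetical names chosen to mirror the checkbox and the behavior described.

```python
class ToyApp:
    """Keeps one set of indexed outputs per base workflow."""

    def __init__(self, base_workflow, run_workflow):
        self.base_workflow = base_workflow
        self.run_workflow = run_workflow  # callable(workflow_id, data) -> output
        self.inputs = {}                  # input_id -> raw data
        self.index = {}                   # workflow_id -> {input_id: output}

    def add_input(self, input_id, data):
        # New inputs are always indexed with the current base workflow.
        self.inputs[input_id] = data
        bucket = self.index.setdefault(self.base_workflow, {})
        bucket[input_id] = self.run_workflow(self.base_workflow, data)

    def change_base_workflow(self, new_workflow, reindex_existing=False):
        # Outputs from the old workflow are kept, so reverting stays cheap.
        self.base_workflow = new_workflow
        bucket = self.index.setdefault(new_workflow, {})
        if reindex_existing:
            # This loop is the potentially slow and costly part.
            for input_id, data in self.inputs.items():
                bucket[input_id] = self.run_workflow(new_workflow, data)


def fake_workflow(workflow_id, data):
    """Stand-in for running a workflow's models on an input."""
    return workflow_id + ":" + data


app = ToyApp("Universal", fake_workflow)
app.add_input("input-1", "photo")
app.change_base_workflow("Food", reindex_existing=True)
app.change_base_workflow("General")  # no reindexing requested
```

In this model, switching back to `"Universal"` needs no recomputation because its outputs were never removed, which matches the revert behavior described above.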

Update via the API

Note that updating the base workflow reindexes your app: all existing inputs are processed through the new base workflow.

This may take some time, and could incur costs. You could avoid the costs by deleting all your inputs before updating the base workflow.

The examples below show the same update using Python (gRPC), Node.js (gRPC), Java (gRPC), and cURL.

Python (gRPC):

    ########################################################################
    # In this section, we set the user authentication, app ID, and default
    # workflow ID. Change these strings to run your own example.
    ########################################################################

    USER_ID = 'YOUR_USER_ID_HERE'
    # Your PAT (Personal Access Token) can be found in the Account's Security section
    PAT = 'YOUR_PAT_HERE'
    APP_ID = 'YOUR_APP_ID_HERE'
    # Change this to update your default workflow
    DEFAULT_WORKFLOW_ID = 'auto-annotation-workflow-id'

    ##########################################################################
    # YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE
    ##########################################################################

    from clarifai_grpc.channel.clarifai_channel import ClarifaiChannel
    from clarifai_grpc.grpc.api import resources_pb2, service_pb2, service_pb2_grpc
    from clarifai_grpc.grpc.api.status import status_code_pb2

    channel = ClarifaiChannel.get_grpc_channel()
    stub = service_pb2_grpc.V2Stub(channel)

    # The PAT is sent as metadata with every call
    metadata = (('authorization', 'Key ' + PAT),)

    userDataObject = resources_pb2.UserAppIDSet(user_id=USER_ID, app_id=APP_ID)

    # Overwrite the app's default (base) workflow
    patch_apps_response = stub.PatchApps(
        service_pb2.PatchAppsRequest(
            user_app_id=userDataObject,
            action="overwrite",
            apps=[
                resources_pb2.App(
                    id=APP_ID,
                    default_workflow_id=DEFAULT_WORKFLOW_ID
                )
            ]
        ),
        metadata=metadata
    )

    if patch_apps_response.status.code != status_code_pb2.SUCCESS:
        print(patch_apps_response.status)
        raise Exception("Patch apps failed, status: " + patch_apps_response.status.description)

Node.js (gRPC):

    // index.js file

    /////////////////////////////////////////////////////////////////////////////
    // In this section, we set the user authentication, app ID, and default
    // workflow ID. Change these strings to run your own example.
    /////////////////////////////////////////////////////////////////////////////

    const USER_ID = 'YOUR_USER_ID_HERE';
    // Your PAT (Personal Access Token) can be found in the Account's Security section
    const PAT = 'YOUR_PAT_HERE';
    const APP_ID = 'YOUR_APP_ID_HERE';
    // Change this to update your default workflow
    const DEFAULT_WORKFLOW_ID = 'auto-annotation-workflow-id';

    /////////////////////////////////////////////////////////////////////////////
    // YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE
    /////////////////////////////////////////////////////////////////////////////

    const { ClarifaiStub, grpc } = require("clarifai-nodejs-grpc");

    const stub = ClarifaiStub.grpc();

    // This will be used by every Clarifai endpoint call
    const metadata = new grpc.Metadata();
    metadata.set("authorization", "Key " + PAT);

    stub.PatchApps(
        {
            user_app_id: {
                "user_id": USER_ID,
                "app_id": APP_ID
            },
            action: "overwrite",
            apps: [
                {
                    id: APP_ID,
                    default_workflow_id: DEFAULT_WORKFLOW_ID
                }
            ]
        },
        metadata,
        (err, response) => {
            if (err) {
                throw new Error(err);
            }

            // 10000 is the SUCCESS status code
            if (response.status.code !== 10000) {
                console.log(response.status);
                throw new Error("Patch apps failed, status: " + response.status.description);
            }
        }
    );

Java (gRPC):

    package com.clarifai.example;

    import com.clarifai.grpc.api.*;
    import com.clarifai.grpc.api.status.StatusCode;
    import com.clarifai.channel.ClarifaiChannel;
    import com.clarifai.credentials.ClarifaiCallCredentials;

    public class ClarifaiExample {

        /////////////////////////////////////////////////////////////////////////////
        // In this section, we set the user authentication, app ID, and default
        // workflow ID. Change these strings to run your own example.
        /////////////////////////////////////////////////////////////////////////////

        static final String USER_ID = "YOUR_USER_ID_HERE";
        // Your PAT (Personal Access Token) can be found in the Account's Security section
        static final String PAT = "YOUR_PAT_HERE";
        static final String APP_ID = "YOUR_APP_ID_HERE";
        // Change this to update your default workflow
        static final String DEFAULT_WORKFLOW_ID = "auto-annotation-workflow-id";

        ///////////////////////////////////////////////////////////////////////////////////
        // YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE
        ///////////////////////////////////////////////////////////////////////////////////

        public static void main(String[] args) {

            V2Grpc.V2BlockingStub stub = V2Grpc.newBlockingStub(ClarifaiChannel.INSTANCE.getGrpcChannel())
                .withCallCredentials(new ClarifaiCallCredentials(PAT));

            // Overwrite the app's default (base) workflow
            MultiAppResponse patchAppsResponse = stub.patchApps(
                PatchAppsRequest.newBuilder()
                    .setUserAppId(UserAppIDSet.newBuilder().setUserId(USER_ID).setAppId(APP_ID))
                    .setAction("overwrite")
                    .addApps(
                        App.newBuilder()
                            .setId(APP_ID)
                            .setDefaultWorkflowId(DEFAULT_WORKFLOW_ID)
                    ).build()
            );

            if (patchAppsResponse.getStatus().getCode() != StatusCode.SUCCESS) {
                throw new RuntimeException("Patch apps failed, status: " + patchAppsResponse.getStatus());
            }
        }
    }

cURL:

    curl -X PATCH "https://api.clarifai.com/v2/users/YOUR_USER_ID_HERE/apps/" \
      -H "Authorization: Key YOUR_PAT_HERE" \
      -H "Content-Type: application/json" \
      --data-raw '{
        "action": "overwrite",
        "apps": [
          {
            "id": "YOUR_APP_ID_HERE",
            "default_workflow_id": "auto-annotation-workflow-id"
          }
        ]
      }'
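If you prefer not to install the gRPC client, the same HTTP request can be assembled with Python's standard library. The following is a minimal sketch that mirrors the cURL request (same URL, headers, and JSON body); the helper name `build_patch_apps_request` is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request

API_BASE = "https://api.clarifai.com/v2"


def build_patch_apps_request(user_id, pat, app_id, workflow_id):
    """Build (but do not send) the PATCH request that sets an app's base workflow."""
    payload = {
        "action": "overwrite",
        "apps": [
            {"id": app_id, "default_workflow_id": workflow_id},
        ],
    }
    return urllib.request.Request(
        url=f"{API_BASE}/users/{user_id}/apps/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Key {pat}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


request = build_patch_apps_request(
    "YOUR_USER_ID_HERE",
    "YOUR_PAT_HERE",
    "YOUR_APP_ID_HERE",
    "auto-annotation-workflow-id",
)

# Sending the request changes the base workflow and reindexes the app,
# so it is left commented out in this sketch:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Building the request separately from sending it makes it easy to inspect the payload before triggering a reindex.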

Base Workflow Use Cases

Choose a base workflow that optimizes the performance of your custom model. For example, if you're training a custom model around food, you could choose the Food workflow as your base workflow to yield better results.

Below is a table outlining the potential use cases for various base workflows. You can refer to this table to determine the workflow that best suits your needs.

Empty

| Workflow | Use Case |
|---|---|
| Empty | Empty workflow for apps that do not need inputs to be indexed for search and/or transfer learning. For example, if the app is used for deep training and/or labeling, you can set the base workflow as Empty to speed up the input upload process; later, you can change to another workflow to index your app. |

Text Workflows

| Workflow | Use Case |
|---|---|
| Text | A single-model workflow with a text embedding model for general English text |
| BAAI-General-Embedding-Base-en | A workflow for text search |
| Universal | A universal workflow for text and image search |
| Roberta-embedder | |
| MSMARCO-Distilbert-Text-Search | Text search workflow for TREC (Text REtrieval Conference) 2022 |
| Text-Moderation | A single-model text moderation workflow that combines classification and embedding functions to classify harmful text content |

Image Workflows

| Workflow | Use Case |
|---|---|
| Demographics | Multi-model workflow that detects, crops, and recognizes demographic characteristics of faces. Visually classifies age, gender, and multicultural appearance |
| Universal | A universal workflow for text and image search |
| Apparel | |
| Face-V4 | |
| General-Detection | A general image detection workflow that detects a variety of common objects, and enables visual search using general embeddings on detected regions |
| OCR-Translation-English-Spanish | |
| Face-Sentiment | Multi-model workflow that combines face detection and sentiment classification of the following concepts: anger, disgust, fear, neutral, happiness, sadness, contempt, and surprise |
| Wedding | Visually classifies wedding-related content and enables visual search using specific embeddings |
| Food | A workflow that combines detection, classification, and embedding functions to visually classify food items and enable visual search using embeddings |
| Moderation | An image moderation workflow that combines detection, classification, and embedding to classify harmful content and enable visual search using embeddings |
| Travel | A workflow that combines detection, classification, and embedding to classify travel-related properties and items, and enable visual search using embeddings |
| Face | A workflow that combines detection, recognition, and embedding to generate face landmarks and enable visual search using detected faces' embeddings |
| General | A general image workflow that combines detection, classification, and embedding to identify general concepts, including objects, themes, moods, etc. |
| Visual-Segmenter | Generates image segmentation masks for concepts in an image |
| Segmentation | |
| Person-Tracking | |
| Person-Tracking-Lite | |
| Vehicle-Detection | |

Audio Workflows

| Workflow | Use Case |
|---|---|
| ASR-Sentiment | Obtains the sentiment of an audio input. Takes audio as input, runs an Automatic Speech Recognition (ASR) model, and uses the resulting text as input to a text sentiment model |
| Audio-Sentiment | Obtains the sentiment of an audio input. Takes audio as input, runs an Automatic Speech Recognition (ASR) model, and uses the resulting text as input to a text sentiment model |
| ASR-Summarization | Summarizes text from English audio |